Many YouTube videos about electronics are completely wrong

This statement about YouTube videos actually applies to a LOT of stuff posted on the Internet, not just electronics. And, of course, it also applies to other communications media, such as the printed word, whether on paper or read on a video monitor. It is becoming really hard to determine what to believe, or whom to trust, in this century. Okay, it has always been hard to find the truth in any century, but the search for truth is an interesting endeavor in itself.

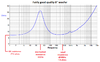

I have to learn it, if I want to learn about parasitic effects, because I want really fast logic.

If you want to learn about reeeealy faaaast logic, you need to learn about quantum computing and qubits. Folks who are learning how to build computers with qubits probably think such a machine might be able to answer questions even before someone asks them. This could lead to AI smarter than God, unless He got there first. Just kidding. No one has built a practical qubit-based computer yet.

Problem is, currently you need to cool the "circuits" down close to absolute zero (0 kelvin) before anything begins to work. Not exactly a home-brew project, unless you happen to have an ultra-high vacuum system (for heat insulation), a gold-plated whatsis for the electronics, plus a few PhDs to keep everything running.

Lots of images of the experimental hardware can be seen here.

Or, just Google "quantum computer images".

Parasitic effects were one of the many reasons that SPICE software modeling was invented during the initial development of integrated circuits. Back in the day, it was very expensive to perform the artwork layouts necessary to produce mass quantities of integrated circuits. Even with the artwork in hand, it could take weeks to process the wafers and build up the integrated circuit, layer by layer. Without some means to simulate the final product with a computer program, it could (and did) take months to go from concept to something that was tested, reliable, and could be sold in million-lot quantities for just a few pennies per device. Of course, the "price per chip" didn't come down into the pennies range until late in the 20th century, after almost fifty years of experience and expanding competition.
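To get a rough feel for why parasitics matter for fast logic, here is a minimal sketch (not a SPICE model) that treats a logic output driving a stray capacitance as a single-pole RC node. The 10%-to-90% rise time of such a node is RC·ln(9), or about 2.2·RC. The function name and the 50 ohm and 10 pF values are my own assumptions, picked only for illustration; real parts and layouts will differ.

```python
import math

def rc_rise_time(resistance_ohms, capacitance_farads):
    """10%-90% rise time of a single-pole RC node: t_r = R * C * ln(9)."""
    return resistance_ohms * capacitance_farads * math.log(9)

# Hypothetical values, chosen only for illustration:
driver_resistance = 50.0     # ohms, assumed driver output resistance
stray_capacitance = 10e-12   # farads, assumed parasitic load (10 pF)

t_r = rc_rise_time(driver_resistance, stray_capacitance)
print(f"rise time = {t_r * 1e9:.2f} ns")
```

With these assumed numbers, a mere 10 pF of stray capacitance already costs about a nanosecond per edge, which is why parasitics have to be modeled before committing a fast design to silicon.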

Logic speed advanced with each generation of new logic devices. Resistor-Transistor-Logic (RTL) was soon replaced by Diode-Transistor-Logic (DTL), which yielded to Transistor-Transistor-Logic (TTL) in the 1960s. Then CMOS came along to sacrifice speed for low power consumption, while Emitter-Coupled-Logic (ECL) hung in there as the speed champion for a long time. Eventually CMOS speeds matched TTL speeds, but by then discrete logic circuits were being replaced by more advanced circuitry integrated on a single chip. Initially, there were some "false starts" on the way to the goal of a system-on-a-chip: early processors required a boatload of "glue" integrated circuits before anything useful could be accomplished.

And that brings us to about the beginning of the 21st century. Today, ICs can pack billions of transistors on each chip, and the dimensions of "features" such as transistors, diodes, resistors and conductors have shrunk to sub-micrometer levels, so small that soft x-rays are required to expose the photo-resist masks used to make the ICs. This is where you want to be, now and in the foreseeable future, if you want to get involved with the fastest logic available using electronics. The human brain is still the most complex, and the fastest, "computer" currently available, but the input/output channels nature provided are too slow to take advantage of the processing power available. Plus, no one really knows how the human brain works or how to replace or repair a defective one. So, science must get along with the current Mark I model until someone figures out how to build a qubit computer to replace it. When, not if, that day finally comes, the first sentient artificial intelligence computer may appear, or so science fiction authors have long speculated.