The silicon camera sensor (probably a CCD) will have its peak sensitivity at about 0.9μm, or 900nm, wavelength. This IS close to the high-frequency end of the near-infrared spectrum, if we define that end as the long-wavelength limit of human visual perception. That limit varies from person to person, probably as a result of genetic selection. I can, for example, dimly see some infrared LEDs that appear unlit to most people. This may perhaps have been an advantage for hunting at night or exploring caves, but I have yet to find it useful in my daily life.

As for temperature versus wavelength... there is no such thing. At ANY given temperature, a body (blackbody or graybody or anything in between) emits a continuous radiation spectrum whose intensity is a function of wavelength, with a PEAK at a wavelength set by the temperature. Well, actually, the emission is quantized into discrete wavelengths, but the separation is so small that it appears to be continuous. It so happens that things at room temperature, or about 300K, have their peak emission at about 9.66μm, which happens to be quite close to the CO₂ laser wavelength of 10.6μm and smack dab in the middle of a mid-infrared atmospheric transmission window (one of two) that extends from 8μm to 14μm. There is another transmission window that extends from 3μm to 5μm. Both windows are useful for FLIR (Forward Looking Infra-Red) imaging.
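For anyone who wants to check the 9.66μm figure, here is a minimal Python sketch using Wien's displacement law (peak wavelength = b / T). The function names and the `WINDOWS_UM` table are my own, made up for illustration; the window limits are just the ones quoted above, and the law assumes an ideal blackbody.

```python
WIEN_B_UM_K = 2897.77  # Wien displacement constant, in micrometre-kelvin

# The two atmospheric windows quoted in the post, in micrometres.
WINDOWS_UM = {"3-5 um": (3.0, 5.0), "8-14 um": (8.0, 14.0)}


def peak_wavelength_um(temp_k):
    """Wavelength of peak blackbody emission (micrometres) at temp_k kelvin."""
    if temp_k <= 0:
        raise ValueError("temperature must be positive (kelvin)")
    return WIEN_B_UM_K / temp_k


def window_for(wavelength_um):
    """Name of the window containing wavelength_um, or None if it is outside both."""
    for name, (low, high) in WINDOWS_UM.items():
        if low <= wavelength_um <= high:
            return name
    return None


for temp_k in (300, 400, 500):
    peak = peak_wavelength_um(temp_k)
    print(f"{temp_k} K: peak at {peak:.2f} um, window: {window_for(peak)}")
```

At 300K the peak lands at about 9.66μm, inside the 8μm to 14μm window. The 400K and 500K peaks (roughly 7.2μm and 5.8μm) fall between the two windows.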

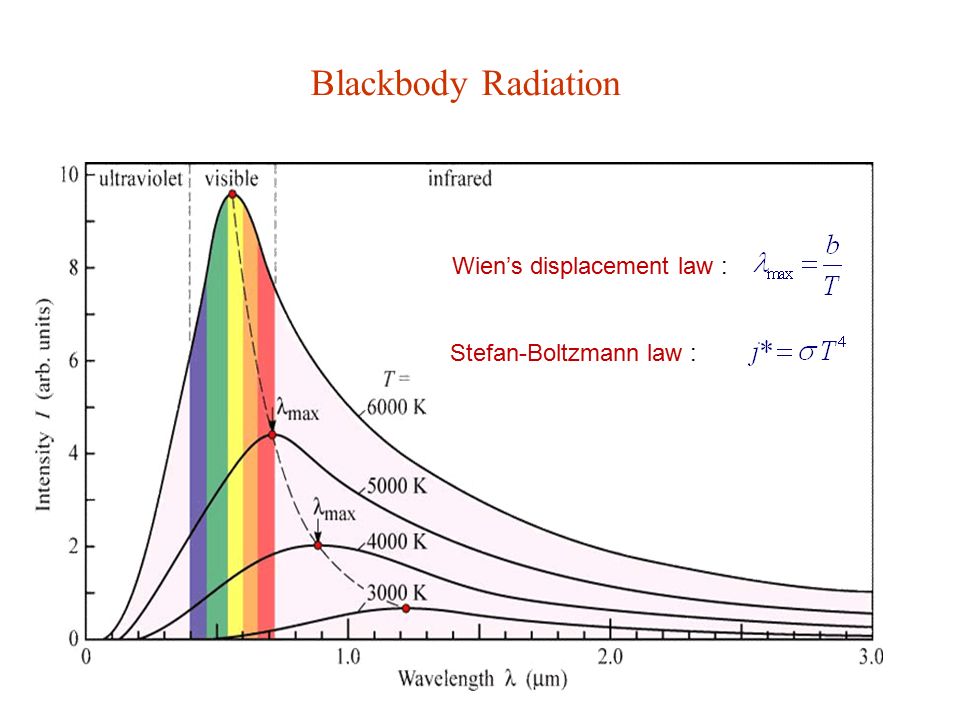

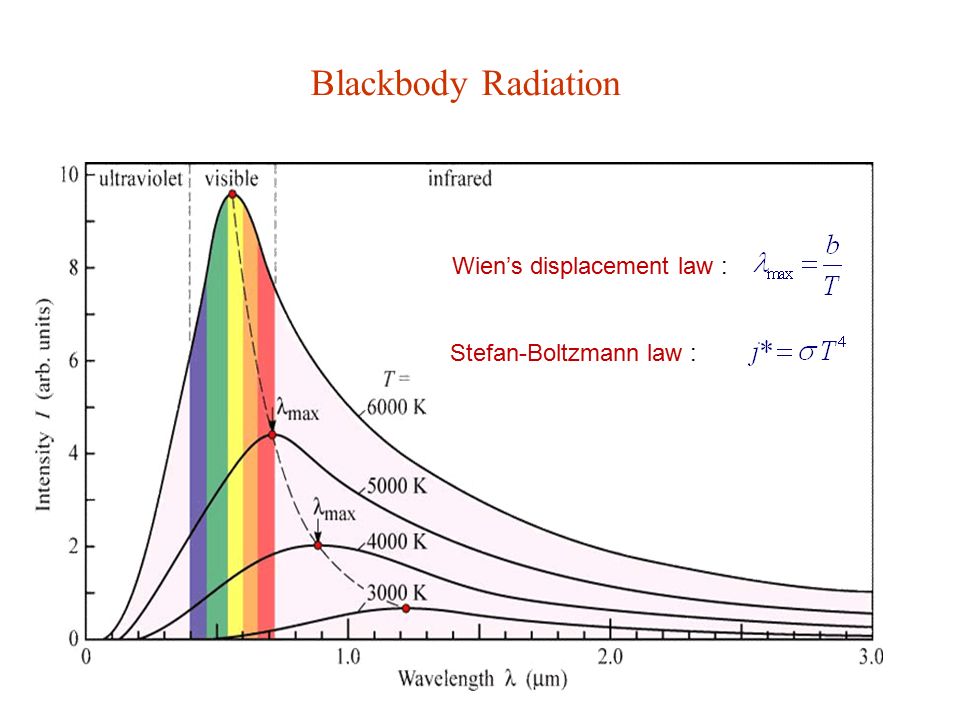

As the temperature increases, the peak of the emission spectrum moves to shorter wavelengths:

The graph above shows peak wavelengths in the infrared for 300K, 400K, and 500K. At higher temperatures the whole ensemble shifts to shorter wavelengths, with higher peaks that increase as the fifth power of temperature (the total radiated power, the area under each curve, increases as the fourth power), always with a sharp fall-off at shorter wavelengths and a very long tail at longer wavelengths. In this graph the visible spectrum doesn't appear at all because it requires some pretty HOT temperatures to produce visible light... well, visible to the unaided human eye or most silicon sensors, but the radiation is nevertheless still present even if your eye or camera cannot "see" it. So, at 900nm or 0.9μm there is more than enough near-infrared energy coming through your window to "white out" the image.
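If you want to see how the curves scale with temperature without trusting a graph, the sketch below evaluates Planck's law for an ideal blackbody at 300K, 400K, and 500K. It reports the height of each peak and a numerically integrated total, so the two can be compared against simple powers of temperature. The function names, integration limits, and step count are my own assumptions, not anything standard.

```python
import math

H = 6.62607015e-34       # Planck constant, J*s
C = 2.99792458e8         # speed of light, m/s
K_B = 1.380649e-23       # Boltzmann constant, J/K
WIEN_B = 2.897771955e-3  # Wien displacement constant, m*K


def planck_radiance(wavelength_m, temp_k):
    """Blackbody spectral radiance, W per m^2 per steradian per metre of wavelength."""
    x = H * C / (wavelength_m * K_B * temp_k)
    return (2.0 * H * C**2 / wavelength_m**5) / math.expm1(x)


def peak_radiance(temp_k):
    """Spectral radiance evaluated at the Wien peak wavelength for temp_k."""
    return planck_radiance(WIEN_B / temp_k, temp_k)


def total_exitance(temp_k, lo_m=0.2e-6, hi_m=1000e-6, steps=4000):
    """Trapezoid integral of pi * radiance over wavelength, on a log-spaced grid.

    The 0.2 um to 1000 um limits are an assumption that captures essentially
    all of the emission for temperatures of a few hundred kelvin.
    """
    log_lo, log_hi = math.log(lo_m), math.log(hi_m)
    step = (log_hi - log_lo) / steps
    total = 0.0
    for i in range(steps + 1):
        lam = math.exp(log_lo + i * step)
        weight = 0.5 if i in (0, steps) else 1.0
        # d(lambda) = lambda * d(ln lambda), hence the extra factor of lam
        total += weight * planck_radiance(lam, temp_k) * lam
    return math.pi * total * step


base = 300.0
for temp_k in (300.0, 400.0, 500.0):
    peak_ratio = peak_radiance(temp_k) / peak_radiance(base)
    total_ratio = total_exitance(temp_k) / total_exitance(base)
    print(f"{temp_k:.0f} K: peak x{peak_ratio:.2f} (T^5 predicts x{(temp_k / base) ** 5:.2f}), "
          f"total x{total_ratio:.2f} (T^4 predicts x{(temp_k / base) ** 4:.2f})")
```

The peak height follows the fifth power of temperature, while the integrated total follows the fourth power (Stefan-Boltzmann). A real graybody would scale these by its emissivity.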

The graph below shows the emission at visible wavelengths as a function of wavelength. Although the intensity scale is in arbitrary units on this graph, note that the curves for lower temperatures are entirely contained under the curves for higher temperatures. This is true across the entire electromagnetic spectrum, but note also the considerably lower intensities at longer wavelengths for any arbitrary temperature. The fact that the peak of the emission spectrum occurs near 10μm for objects near room temperature (roughly 300K) means there is almost no energy emitted at much shorter wavelengths, in particular at the silicon detector's peak sensitivity at 0.9μm.
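To put a number on "almost no energy", here is a rough Python sketch that compares the 300K blackbody emission at 0.9μm with the emission at the 9.66μm peak, and also checks that cooler curves sit entirely under hotter ones across the visible. It works in arbitrary units, just like the graph. The helper names are mine, and the 3000K to 5000K temperatures are only example values I picked, not the ones plotted.

```python
import math

C2_UM_K = 14387.77  # second radiation constant h*c/k, in micrometre-kelvin


def relative_radiance(wavelength_um, temp_k):
    """Planck spectral radiance up to a constant factor (arbitrary units)."""
    x = C2_UM_K / (wavelength_um * temp_k)
    return 1.0 / (wavelength_um**5 * math.expm1(x))


def radiance_ratio(wavelength_a_um, wavelength_b_um, temp_k):
    """Emission at wavelength_a_um divided by emission at wavelength_b_um."""
    return relative_radiance(wavelength_a_um, temp_k) / relative_radiance(wavelength_b_um, temp_k)


def curves_nested(temps_k, wavelengths_um):
    """True if, at every wavelength given, each hotter body out-emits the next cooler one."""
    ordered = sorted(temps_k)
    return all(
        relative_radiance(lam, cool) < relative_radiance(lam, hot)
        for lam in wavelengths_um
        for cool, hot in zip(ordered, ordered[1:])
    )


# 300 K body: silicon peak sensitivity (0.9 um) versus the emission peak (9.66 um)
ratio = radiance_ratio(0.9, 9.66, 300.0)
print(f"0.9 um emission relative to the 9.66 um peak at 300 K: {ratio:.1e}")

# Example temperatures (assumed) sampled across the visible, 0.40 um to 0.70 um
visible_um = [0.40 + 0.01 * i for i in range(31)]
print("cooler curves under hotter ones:", curves_nested([3000.0, 4000.0, 5000.0], visible_um))
```

The ratio comes out on the order of 1e-16, so a room-temperature object contributes essentially nothing of its own at the wavelengths a silicon sensor responds to. Whatever the sensor sees at 0.9μm from such an object is reflected or scattered light.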

This rabbit hole is quite deep and becomes much more complicated when you consider reflectance, absorptance, transmittance, emittance and scattering from real (not perfect blackbody) objects as functions of surface roughness, emissivity, wavelength and temperature. None of this really became vitally important until the development of hyperspectral imaging sensors complicated the hell out of what an "image" represents.