Good Morning,

I have inherited a board design that will take Ethernet packets and convert the commands to analog voltages. My task is to make it work (as it doesn't currently work). I'm having an extremely hard time getting a specific part of the board to operate as I'd expect it to (and, I assume, as the original designer would expect). BLUF: the 2.5V voltage reference device is putting out 10V instead of 2.5V! I presumed it was a bad device, but I've replaced it three times now with no success; I think it's frying as soon as I power up. See the details below.

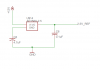

I have attached a schematic of the portion that is causing me to pull out my hair. You should know that the "2.5V_REF" is coming from an AD1582 voltage reference, and the "DAC_OUT#" signals are the outputs of a DAC, feeding the OpAmp channels. The OpAmp is an LT1053 device; it is powered (+V) by 12V, and (V-) is not connected (rail-to-rail is 0V to 12V). The "An#_VO" signals simply go to output pins that will drive an analog device off-board. Basically, the "client" expects a voltage between 2.5V and 7V (hence the 2.5V bias). Like I said up front, the issue is that the 2.5V_REF (used to bias) is putting out 10V instead of 2.5V. Thinking the OpAmp matrix was causing feedback, I used the extra OpAmp on the LT1053 as a buffer for the 2.5V reference (as noted in the attachment), but this did not fix the issue. I know there is a design issue, but I cannot figure out why this is happening.

I appreciate your help.

R/

Nick
