Hello everyone!

I'm quite new to electronics. For one of my first projects, I decided to make a USB/solar NiMH trickle charger using only a diode and a resistor. I neither need nor want fast charging, and while I know there are more complicated circuits that provide a regulated constant current, I want to build something simple and power-efficient that can tolerate dips in voltage.

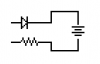

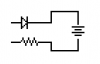

So, here's the simple circuit I came up with and built.

My diode drops the voltage by about 0.7 V, and my input voltage is about 4-5 V. As I'm charging a pair of 2500 mAh NiMH cells, I selected a 25 Ω, 1 W resistor, which should get the current down to about 250 mA at 5 V.

...Or so I thought! The actual current I got was far, far less: barely enough to power an LED or two!

I found that a 10 Ω resistor got me up to 140-160 mA, but I don't understand why my calculations are so far off. Did I place the resistor in the wrong part of my circuit, or am I not accounting for the resistance of the batteries? I tried measuring one battery's resistance by selecting ohms on my multimeter and probing the positive and negative terminals, but got no reading. They can't have that much resistance, because when I short the resistor, I get a very large current, roughly equal to what my power source can supply.
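As a rough check on these numbers, here is a minimal Python sketch that treats the diode and the battery pack as fixed voltage drops in series with the resistor, so only the leftover voltage drives current through it (Ohm's law). The 5 V source, ~0.7 V diode drop, and 2.4 V pack voltage come from the post; modelling the diode and cells as constant drops is an assumption (real diode and cell voltages shift with current and state of charge), and `charge_current` is a hypothetical helper name.

```python
def charge_current(v_in, v_diode, v_batt, r_ohms):
    """Current in amps through a series source -> diode -> resistor -> battery loop.

    Assumption: the diode and battery act as fixed voltage drops, so only the
    leftover voltage appears across the resistor (Ohm's law, I = V / R).
    """
    v_resistor = v_in - v_diode - v_batt
    # If the source can't overcome the diode and battery, no charge current flows.
    return max(v_resistor, 0.0) / r_ohms


# Figures from the post: 5 V source, ~0.7 V diode drop, two NiMH cells at 2.4 V nominal.
for label, v_batt in [("battery ignored", 0.0), ("battery included", 2.4)]:
    for r in (25, 10):
        i_ma = 1000 * charge_current(5.0, 0.7, v_batt, r)
        print(f"{label}, {r} ohm: {i_ma:.0f} mA")
```

With the battery voltage included, this simple model gives about 76 mA for 25 Ω and 190 mA for 10 Ω. Cells on charge typically sit somewhat above their 1.2 V nominal, which would pull both figures lower still.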

What am I missing? Should I have calculated the resistor's value using the voltage of the two NiMH cells (2.4 V) rather than the power source (5 V), or the power source minus the diode (4.2 V)? I'm a little confused about what the resistor is acting on, and whether its function would change if, say, I placed it before the diode or at some other location.
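Rearranging the same series-loop relation gives the resistor for a target current. This sketch is hypothetical: `series_resistor` is an illustrative name, the 250 mA target is the figure from the post, and the 1.4 V-per-cell charging voltage is an assumed value rather than a measurement. Because every element is in series, the same current flows whether the resistor sits before or after the diode.

```python
def series_resistor(v_in, v_diode, v_batt, i_target):
    """Resistance (ohms) needed for i_target amps in a source-diode-resistor-battery loop."""
    v_resistor = v_in - v_diode - v_batt  # only this voltage appears across R
    if v_resistor <= 0:
        raise ValueError("source voltage cannot overcome the diode and battery")
    return v_resistor / i_target


# Target from the post: 250 mA (C/10 for 2500 mAh cells).
# Assumption: two NiMH cells sit near 1.4 V each (2.8 V total) while charging.
r = series_resistor(5.0, 0.7, 2.8, 0.250)
print(f"R = {r:.1f} ohm, dissipating {0.250 ** 2 * r:.2f} W")

# The same resistor when the source sags to 4.5 V:
i_sag = (4.5 - 0.7 - 2.8) / r
print(f"at 4.5 V in: {1000 * i_sag:.0f} mA")
```

Under these assumptions the resistor comes out near 6 Ω, and because only about 1.5 V is left across it, a 0.5 V dip in the source cuts the current from 250 mA to roughly 167 mA.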