hi everyone,

this is my first post here, so please forgive me if i've posted in the wrong place.

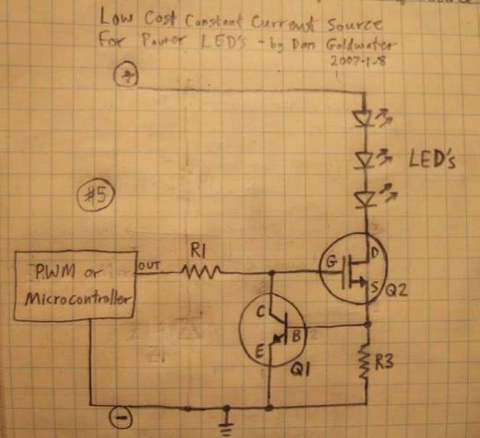

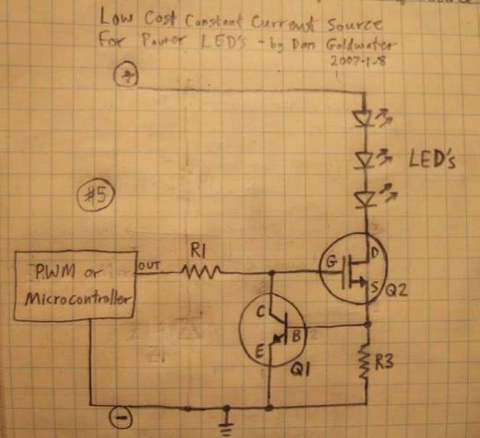

i'm developing a circuit that will use many LEDs, mixing ordinary 5mm leds with 350mA Luxeon Stars. i am using this circuit on Instructables (Circuit #5:

) to provide power for the LED, using a separate power input.


to control PWM, i'm using a Texas Instruments TLC5940 LED driver. this allows an adjustable maximum constant current to flow through LEDs connected at each of the output pins. at the moment i have the maximum current set to 1mA, and it works fine for standard 5mm LEDs of any forward voltage: the cathode of the LED goes to the OUTn pin of the TLC5940, and the anode goes to the +5V supply.

but to control my Luxeons, the driver circuit needs an input voltage sufficient to trigger the MOSFET (an IRFZ34E), and i'm having difficulty figuring out how to make this work. i initially assumed i could put a resistor in place of one of the ordinary 5mm LEDs, with resistance R = V/I = 5V/1mA = 5kΩ, and then put the ground and control voltage input from the Luxeon driver across this resistor, with the ground on the TLC5940 side of the resistor and the control input on the +5V side.

plugging in just the resistor and measuring the voltage across it gave reasonable enough readings (around 4.2V). but when i connected up the LED driver it didn't do what i was expecting at all: connecting the ground from the external supply in particular seemed to kill the TLC5940, which i then had to power-cycle to get running again.
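as a quick sanity check on the numbers above, here is a minimal python sketch of the ohm's law arithmetic. the function names are made up for illustration, and it assumes an ideal resistor with nothing else connected to it:

```python
def resistor_for(voltage_v, current_a):
    """Resistance (ohms) that drops voltage_v at current_a, from R = V / I."""
    return voltage_v / current_a


def current_through(voltage_v, resistance_ohm):
    """Current (amps) implied by a measured voltage across a resistor, I = V / R."""
    return voltage_v / resistance_ohm


# resistor chosen for a 5V drop at the 1mA limit set on the TLC5940
r = resistor_for(5.0, 1e-3)

# current implied by the ~4.2V actually measured across that resistor
i = current_through(4.2, r)

print(f"R = {r:.0f} ohm")
print(f"I = {i * 1e3:.2f} mA")
```

so the 4.2V reading corresponds to roughly 0.84mA through the 5kΩ resistor, a bit under the 1mA limit.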

i'm in over my head here. could somebody give me some clues about what's going on, and what i might do instead of what i'm currently doing, which is clearly not the right thing to do?

cheers!

d
