We wanted to use Tactigon and T-Skin to move a robotic arm and have it follow our movements. A Raspberry Pi is the central unit.

Introduction

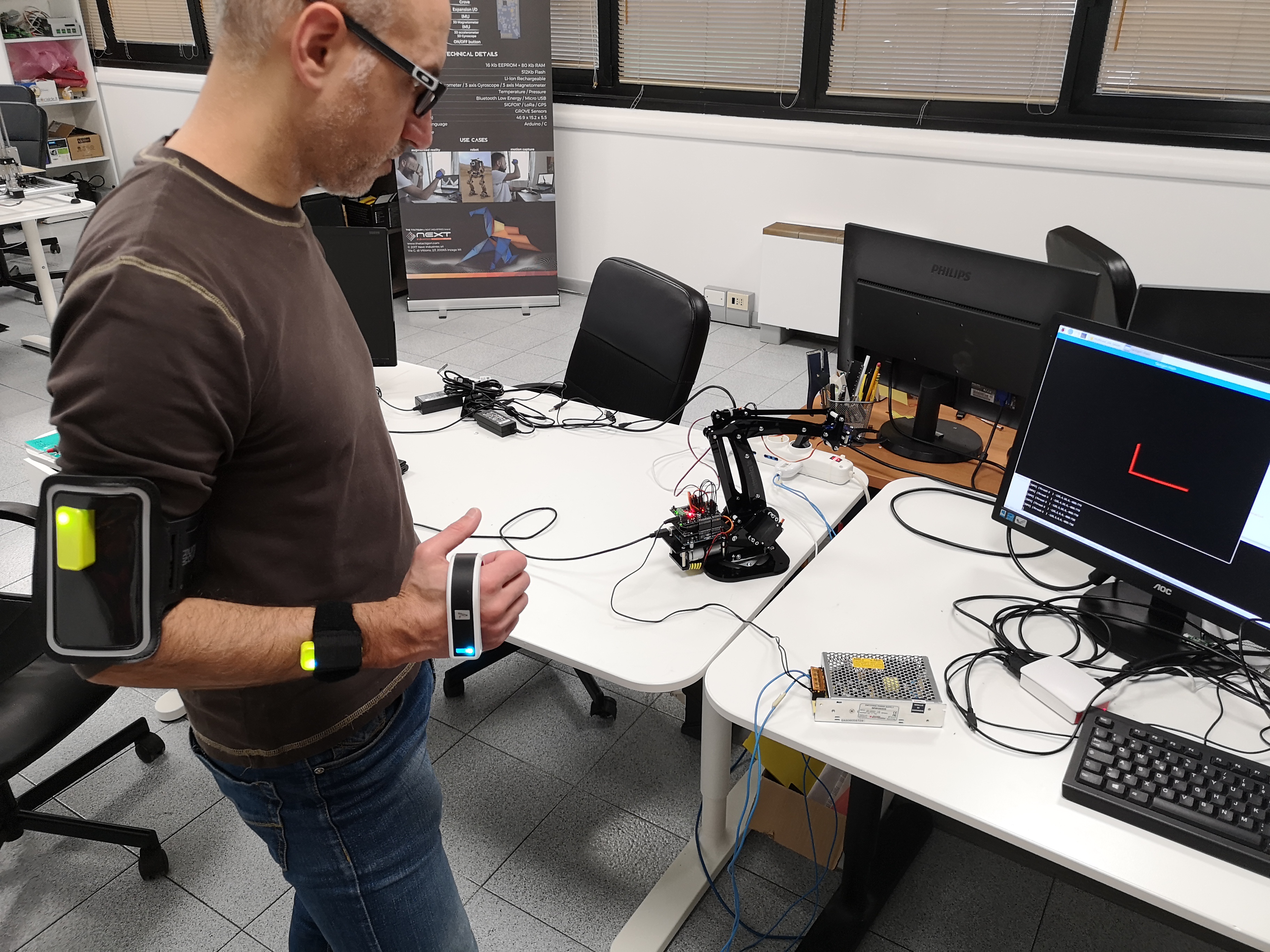

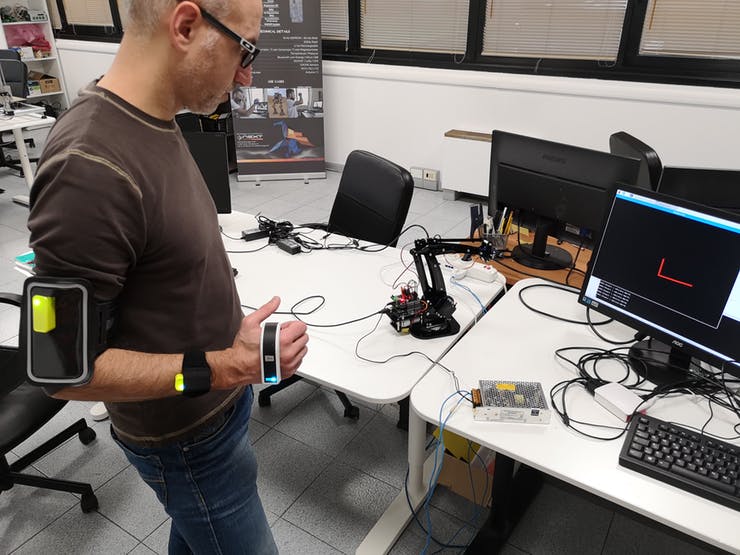

Hello! We made this project because we wanted to use The Tactigon ONE and T-Skin to move a robotic arm and have it follow our movements, like a shadow. By using two Tactigon ONEs (one on the upper arm and another on the forearm) along with a T-Skin (worn on the hand), we made this possible! The other devices we used are a Raspberry Pi 3, an Arduino Uno, a BLE shield, a servo shield and, obviously, a robotic arm.

https://youtu.be/kcBtqmV45r8

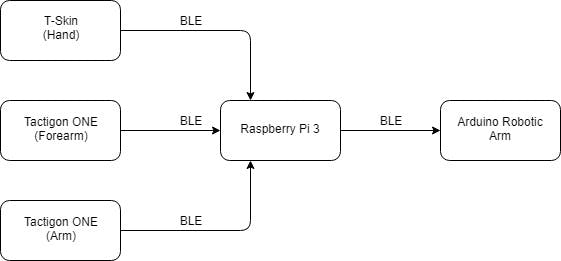

Hardware Architecture

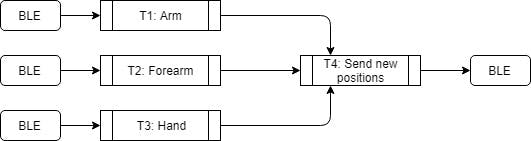

Lots of devices are connected here, so the diagram above shows how they fit together.

The Raspberry Pi 3 plays a fundamental role: it handles the inputs from the human arm, translates them into servo positions and then sends the new positions to the robotic arm. Nice!

The Arduino on the robotic arm is equipped with a Bluetooth Low Energy shield, through which it receives the new servo positions, and a "servo shield", which decouples the Arduino power supply from the servo power supply. This lets us use high-torque servos without drawing more current than the microcontroller can supply.

First Integration

The first step was to assemble a few pieces of cardboard to resemble an arm, with 2 joints to emulate the elbow and wrist. This sped up testing and avoided unwanted movement of the arm.

After this step, we designed a small case in our favourite CAD software and printed it out.

We built the cases and put together the final piece.

Software Architecture

Now that we know how our nodes are linked, we can analyze the software running on each of them.

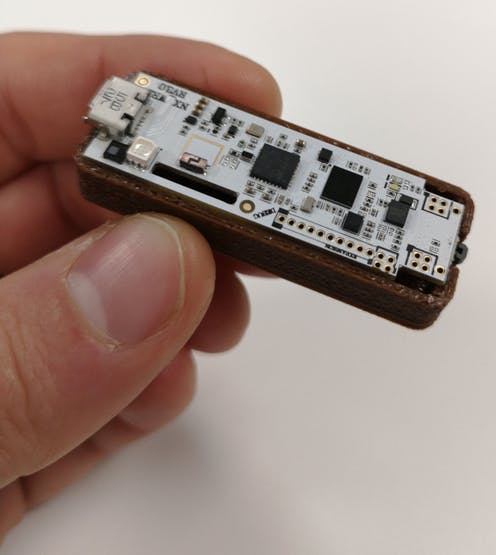

The Tactigon ONEs and the T-Skin run an Arduino sketch that processes the IMU data and converts it to quaternions. This data is then published over Bluetooth Low Energy.
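To make the quaternion hand-off concrete, here is a minimal sketch of how one quaternion could be packed into a BLE payload and read back on the receiving side. It is written in Python only to match the Raspberry Pi script; the real devices run Arduino sketches, and the payload layout (`QUAT_FORMAT`, four little-endian 32-bit floats in w, x, y, z order) and the function names are assumptions for illustration, not the format the project actually uses.

```python
import math
import struct

# Hypothetical layout: w, x, y, z as little-endian float32 (16 bytes total).
QUAT_FORMAT = "<4f"


def pack_quaternion(w, x, y, z):
    """Normalize the quaternion and pack it into 16 bytes."""
    norm = math.sqrt(w * w + x * x + y * y + z * z)
    if norm == 0.0:
        raise ValueError("zero-length quaternion")
    return struct.pack(QUAT_FORMAT, w / norm, x / norm, y / norm, z / norm)


def unpack_quaternion(payload):
    """Inverse of pack_quaternion: 16 bytes back to a (w, x, y, z) tuple."""
    return struct.unpack(QUAT_FORMAT, payload)


if __name__ == "__main__":
    payload = pack_quaternion(2.0, 0.0, 0.0, 0.0)
    print(len(payload), unpack_quaternion(payload))
```

With this assumed layout a quaternion takes 16 bytes, which would fit in the 20-byte payload available to a BLE notification at the default ATT MTU.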

The Raspberry Pi runs a Python script that uses three threads (T1, T2 and T3) to communicate with the Tactigon ONEs and the T-Skin, one thread (T4) to handle all connections (and reconnect if needed), one for the UI (T5), and one (T6) to send servo positions to the robotic arm. T1 to T3 convert the incoming quaternions into angles, which become the new position of the arm.
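The thread layout can be sketched roughly as follows. This is not the project's script: it shows one sensor thread (standing in for T1 to T3) and one sender thread (standing in for T6) joined by a queue, and it assumes the angle of interest is the pitch of a unit quaternion under the common ZYX Euler convention. The joint name, the function names and the mapping of pitch onto a 0 to 180 degree servo range are all hypothetical, and the BLE reads and writes are replaced by plain Python lists.

```python
import math
import queue
import threading


def quaternion_to_pitch_deg(w, x, y, z):
    """Pitch (rotation about Y) in degrees for a unit quaternion, ZYX convention."""
    s = 2.0 * (w * y - z * x)
    s = max(-1.0, min(1.0, s))  # guard against rounding just outside [-1, 1]
    return math.degrees(math.asin(s))


def pitch_to_servo_deg(pitch_deg):
    """Map pitch in [-90, 90] onto a hypothetical servo range of [0, 180]."""
    return max(0.0, min(180.0, pitch_deg + 90.0))


def sensor_worker(joint, samples, out_queue):
    """Stand-in for T1..T3: turn each quaternion sample into a servo angle."""
    for q in samples:
        out_queue.put((joint, pitch_to_servo_deg(quaternion_to_pitch_deg(*q))))


def sender_worker(in_queue, sent):
    """Stand-in for T6: forward positions until a None sentinel arrives."""
    while True:
        item = in_queue.get()
        if item is None:
            break
        sent.append(item)  # a real script would write to the arm over BLE here


def run_demo():
    half = math.radians(30.0) / 2.0
    # Identity, then a 30 degree rotation about the Y axis.
    samples = [(1.0, 0.0, 0.0, 0.0), (math.cos(half), 0.0, math.sin(half), 0.0)]
    positions = queue.Queue()
    sent = []
    sender = threading.Thread(target=sender_worker, args=(positions, sent))
    sensor = threading.Thread(target=sensor_worker, args=("elbow", samples, positions))
    sender.start()
    sensor.start()
    sensor.join()
    positions.put(None)  # tell the sender there is nothing more to forward
    sender.join()
    return sent


if __name__ == "__main__":
    print(run_demo())
```

Passing positions through a queue keeps the sensor threads from blocking on the link to the arm, which is one plausible reason to give the sender its own thread.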

The robotic arm runs an Arduino sketch too, which receives the servo positions and applies them to its servos.
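As an illustration of what "servo positions" could look like on the wire, here is a small Python sketch of the sending side. The frame format (one byte per servo, each angle clamped to 0 to 180 degrees) and the names `encode_servo_frame` and `decode_servo_frame` are assumptions made for this example; the source does not describe the actual protocol between the Raspberry Pi and the Arduino.

```python
def encode_servo_frame(angles_deg):
    """Clamp each angle to 0..180 degrees and pack one byte per servo."""
    return bytes(max(0, min(180, round(a))) for a in angles_deg)


def decode_servo_frame(frame):
    """Inverse of encode_servo_frame: one integer angle per byte."""
    return list(frame)


if __name__ == "__main__":
    frame = encode_servo_frame([90, 200, -5, 45.4])
    print(decode_servo_frame(frame))
```

Clamping before sending means an out-of-range angle from the sensor side can never ask a servo to move past its mechanical limits, whatever the receiving sketch does with the bytes.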

Conclusions

This shadow robotic arm is awesome since it can replicate a human's movements with higher torque (well, not the small unit we used in this test, though!).

The next step is to make it able to learn movements, optimize them and replicate them endlessly for Industry 4.0 purposes.