Get to know the Jetson Nano in this face detection project using the Raspberry Pi camera and OpenCV!

The Jetson Nano is a GPU-enabled edge computing platform for AI and deep learning applications. It is powered by a 64-bit quad-core ARM Cortex-A57 CPU with 4 GB of RAM onboard. It has a 128-core Nvidia Maxwell GPU for AI and deep learning workloads, which makes it suitable for prototype development as well as production.

The Jetson Nano developer kit, which houses the Nano module, accessories, pinouts, and ports, is ready to use out of the box. It runs a customized Ubuntu 18.04 called Linux4Tegra, built for the Tegra series chips that power the Nvidia Jetson modules.

As mentioned in the Introduction to Jetson Nano article, the developer kit has a mobile industry processor interface (MIPI) powered camera serial interface (CSI) port that supports common camera modules like the Raspberry Pi camera v2 or the Arducam camera. These cameras are small but suitable for machine learning and computer vision applications like object detection, face recognition, image segmentation, and visual odometry estimation on system-on-chip (SoC) platforms. Commercial USB cameras are also suitable for the task but are prone to latency and transmission overheads.

For the face detection task in this tutorial, we are using the RPi camera module v2. It is powered by a Sony IMX219 8-MP sensor. It supports still images as well as high-definition video at different resolutions, including 1080p@30FPS, 720p@60FPS, and VGA@90FPS.

Required Components

Connecting the Camera to the Jetson Nano

- Set up the Jetson Nano Developer Kit using the instructions in the introductory article.

- Add the keyboard, mouse and display monitor.

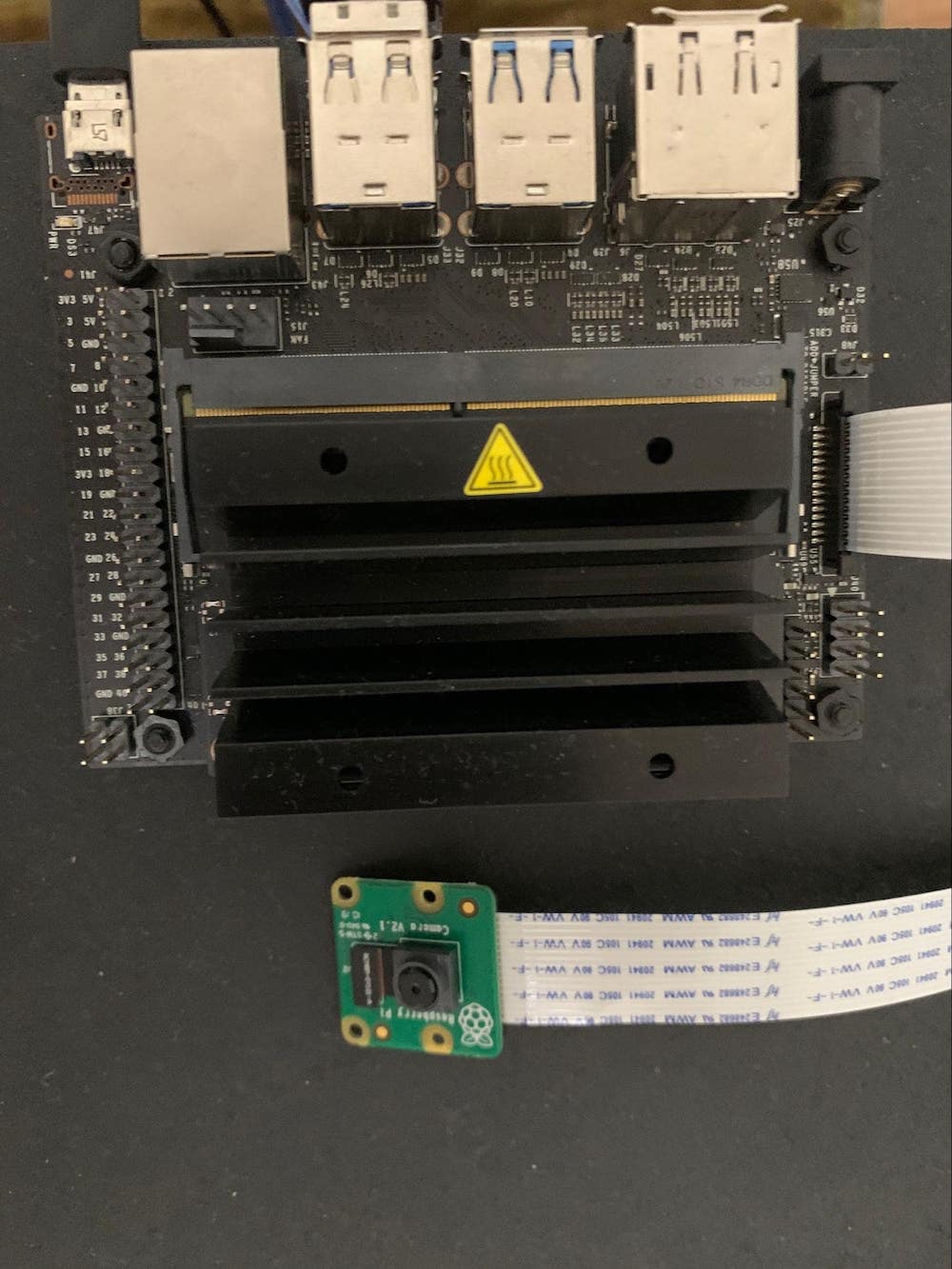

- Pull up the latch on the CSI port and insert the camera ribbon cable into the port. Make sure the connection leads on the port align with those on the ribbon. The contacts on the ribbon should face the heat sink.

- Power up the developer kit.

- Verify that the camera is correctly configured and recognized.

Connecting the camera ribbon cable with the CSI port.

The JetPack SDK provided by Nvidia ships with preinstalled drivers for the RPi camera, so the camera works as a plug-and-play peripheral without the pain of driver installations and compatibility issues.

You cannot use Cheese, the inbuilt GNOME application for recording videos and taking pictures on the Ubuntu OS, to check the camera connection. This is because the RPi camera is not inherently UV4L compatible and is not identified by default Linux applications.

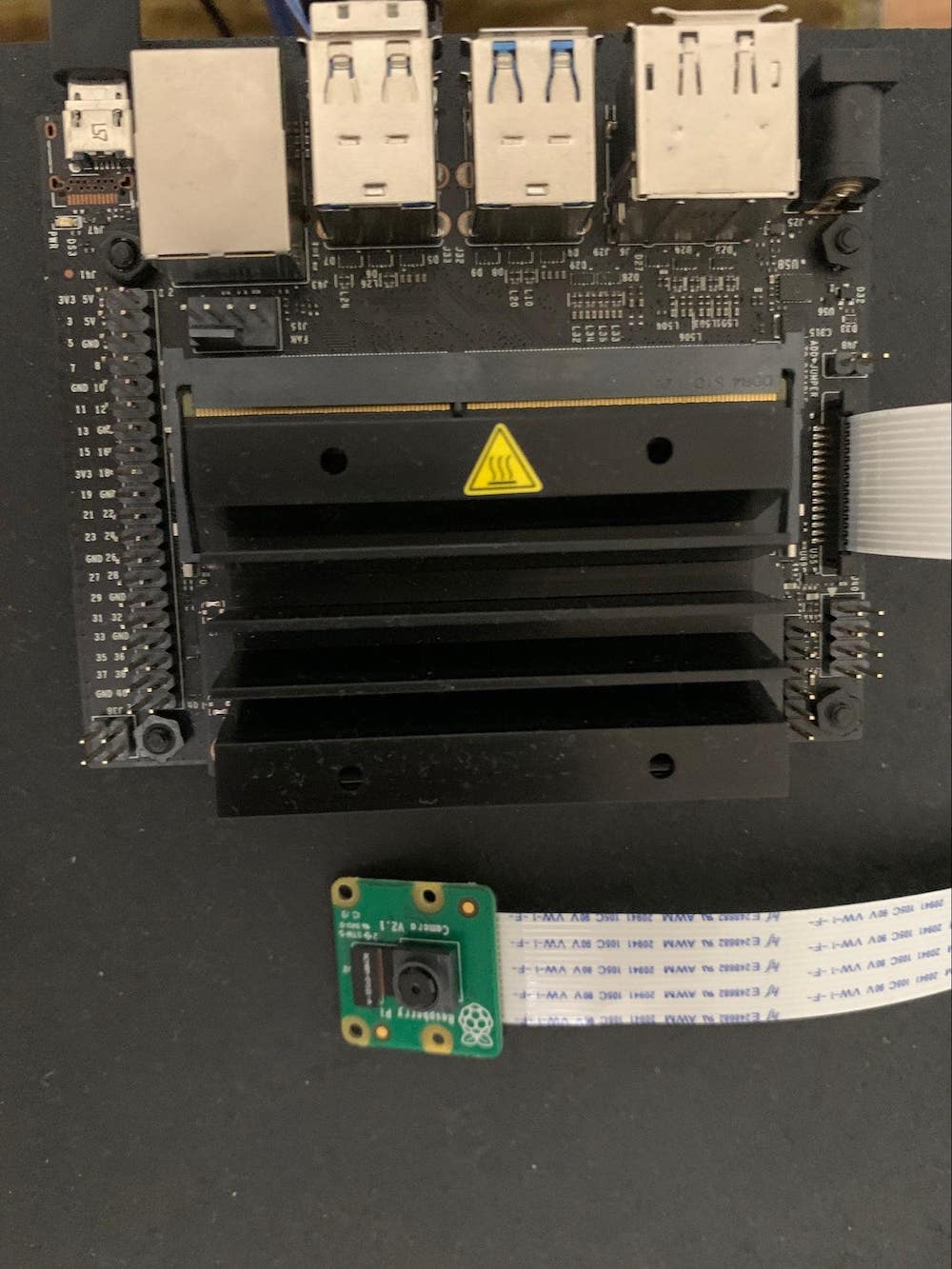

Raspberry Pi Camera Module V2 with Jetson Nano Developer Kit

A simple command-line instruction to check for the camera connection is:

```
akshay@jetson_nano:~$ ls -l /dev/video0
crw-rw----+ 1 root video 81, 0 Jan 2 14:30 /dev/video0
```

ls is the command that lists the contents of a directory or location. The -l option displays the listing in long format, with information about permissions, owner, group, and more. /dev is the directory where device files (special files) are stored in Linux, and /dev/video0 is the device file for the first video device. The leading c in the permissions column marks it as a character device.

If the command reports "No such file or directory" instead of a listing, no camera has been detected by the developer kit.

The Nvidia Jetson series uses GStreamer pipelines for handling media applications. GStreamer is a multimedia framework used for backend processing tasks like format modification, display driver coordination, and data handling. The same will be used here with the Raspberry Pi camera.
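The device check with ls can also be scripted. The following is a minimal sketch, assuming camera devices show up as video0, video1, and so on under /dev, as in the listing earlier. The helper name list_video_devices and its dev_dir parameter are made up for illustration.

```python
import glob
import os


def list_video_devices(dev_dir="/dev"):
    """Return the sorted paths of video device nodes found in dev_dir."""
    # Camera device files are named video0, video1, ... inside /dev.
    return sorted(glob.glob(os.path.join(dev_dir, "video[0-9]*")))


if __name__ == "__main__":
    devices = list_video_devices()
    if devices:
        print("Found video devices: " + ", ".join(devices))
    else:
        print("No camera device found; check the ribbon cable.")
```

An empty list corresponds to the case where ls finds no /dev/video0 entry.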

An even nerdier way to check the camera connection is to use the GStreamer application gst-launch to launch a display window and confirm that you can see a live stream from the camera.

```
akshay@jetson_nano:~$ gst-launch-1.0 nvarguscamerasrc ! 'video/x-raw(memory:NVMM),width=3280, height=2464, framerate=21/1, format=NV12' ! nvvidconv flip-method=0 ! 'video/x-raw,width=480, height=320' ! nvvidconv ! nvegltransform ! nveglglessink -e
```

The command above creates a GStreamer pipeline whose elements are separated by !. It requests frames from the camera at 21 FPS and shows them in a display window of width 480 and height 320. The remaining Nvidia-specific configuration parameters are outside the scope of this article. Details about the different elements of the pipeline can be found at the documentation website for gst-launch.
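Because a pipeline is just a chain of elements joined by !, it can be assembled from parameters instead of being typed by hand. The sketch below builds the capture pipeline string that the Python application in this article passes to OpenCV. The function name build_gstreamer_pipeline and its parameter names are hypothetical, and the defaults simply mirror the values used in this article.

```python
def build_gstreamer_pipeline(capture_width=3280, capture_height=2464,
                             display_width=960, display_height=616,
                             framerate=21, flip_method=0):
    """Assemble a camera capture pipeline string for cv2.VideoCapture."""
    elements = [
        # Camera source element for CSI cameras on Jetson
        "nvarguscamerasrc",
        # Format requested from the sensor (frames stay in NVMM memory)
        "video/x-raw(memory:NVMM), width=%d, height=%d, "
        "format=(string)NV12, framerate=%d/1"
        % (capture_width, capture_height, framerate),
        # Nvidia converter: handles scaling, flipping, and format conversion
        "nvvidconv flip-method=%d" % flip_method,
        "video/x-raw, width=%d, height=%d, format=(string)BGRx"
        % (display_width, display_height),
        # Convert BGRx to the BGR layout that OpenCV expects
        "videoconvert",
        "video/x-raw, format=(string)BGR",
        # Hand the frames over to the application
        "appsink",
    ]
    return " ! ".join(elements)


if __name__ == "__main__":
    print(build_gstreamer_pipeline())
```

Changing display_width and display_height is then enough to try a smaller frame size without editing the string manually.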

Setting Up OpenCV

OpenCV is an open-source computer vision library written natively in C++, with bindings for Python and other languages. The JetPack SDK on the image file for Jetson Nano has OpenCV pre-installed. OpenCV ships with pre-trained models for face detection, eye detection, and more, based on Haar cascades (the Viola-Jones algorithm). The OpenCV website has a tutorial on implementing the algorithm.

Use Python in the terminal to confirm OpenCV installation and its version.

```
akshay@jetson_nano:~$ python
Python 2.7.15+ (default, Oct 7 2019, 17:39:04)
[GCC 7.4.0] on linux2
Type "help", "copyright", "credits" or "license" for more information.
>>> import cv2
>>> cv2.__version__
'3.3.1'
```

Simple Python Application for Face Detection

```python
import cv2
import numpy as np

HAAR_CASCADE_XML_FILE_FACE = "/usr/share/OpenCV/haarcascades/haarcascade_frontalface_default.xml"
GSTREAMER_PIPELINE = 'nvarguscamerasrc ! video/x-raw(memory:NVMM), width=3280, height=2464, format=(string)NV12, framerate=21/1 ! nvvidconv flip-method=0 ! video/x-raw, width=960, height=616, format=(string)BGRx ! videoconvert ! video/x-raw, format=(string)BGR ! appsink'

def faceDetect():
    # Obtain face detection Haar cascade XML files from OpenCV
    face_cascade = cv2.CascadeClassifier(HAAR_CASCADE_XML_FILE_FACE)

    # Video Capturing class from OpenCV
    video_capture = cv2.VideoCapture(GSTREAMER_PIPELINE, cv2.CAP_GSTREAMER)

    if video_capture.isOpened():
        cv2.namedWindow("Face Detection Window", cv2.WINDOW_AUTOSIZE)

        while True:
            return_key, image = video_capture.read()
            if not return_key:
                break

            grayscale_image = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
            detected_faces = face_cascade.detectMultiScale(grayscale_image, 1.3, 5)

            # Create rectangle around the face in the image canvas
            for (x_pos, y_pos, width, height) in detected_faces:
                cv2.rectangle(image, (x_pos, y_pos), (x_pos + width, y_pos + height), (0, 0, 0), 2)

            cv2.imshow("Face Detection Window", image)

            key = cv2.waitKey(30) & 0xff
            # Stop the program on the ESC key
            if key == 27:
                break

        video_capture.release()
        cv2.destroyAllWindows()
    else:
        print("Cannot open Camera")

if __name__ == "__main__":
    faceDetect()
```

Code Description

The code uses the pre-trained Haar cascade models that ship with the OpenCV library. As mentioned earlier, it uses a GStreamer pipeline as the interface between the camera and the OS. The pipeline string is used to create a VideoCapture object.

Each frame from the camera live stream is processed and tested for faces. The code first converts the color image to grayscale, since Haar features rely on intensity differences rather than color. Working on one channel instead of three also reduces the computational overhead. When a face is detected, a rectangle is drawn around its boundaries.
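To make the grayscale step concrete, here is a small pure-Python sketch of the weighted sum that the OpenCV documentation gives for color-to-gray conversion (Y = 0.299 R + 0.587 G + 0.114 B). The helper name bgr_to_gray is hypothetical and the nested-list image is only for illustration. In the application, cv2.cvtColor does this job in optimized native code.

```python
def bgr_to_gray(image):
    """Convert rows of (B, G, R) pixels into rows of single gray values."""
    return [
        # Weighted sum of the three channels; note the B, G, R order.
        [int(round(0.114 * b + 0.587 * g + 0.299 * r)) for (b, g, r) in row]
        for row in image
    ]


if __name__ == "__main__":
    # A 2x2 test frame: white, black, pure blue, pure red
    frame = [[(255, 255, 255), (0, 0, 0)],
             [(255, 0, 0), (0, 0, 255)]]
    print(bgr_to_gray(frame))
```

Each pixel shrinks from three values to one, so the detector has a third as much data to scan per frame.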

Results and Conclusions

The Jetson Nano developer kit is a powerful platform, but unoptimized code and routines can still slow it down. A low frame rate (21 FPS in the capture pipeline) was used to limit the computational load. This Haar cascade based implementation is a rudimentary approach to face detection, and several more advanced machine learning algorithms have been developed since. Also, this implementation does not make use of the GPU available on the Jetson Nano.
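One way to see how much load the detection step adds is to time the per-frame work. This is a minimal sketch, not part of the original application: measure_fps and process_frame are hypothetical names, and process_frame stands in for whatever is done with each frame (for example, the grayscale conversion plus detectMultiScale).

```python
import time


def measure_fps(process_frame, frames):
    """Run process_frame on each frame and return frames handled per second."""
    start = time.time()
    count = 0
    for frame in frames:
        process_frame(frame)
        count += 1
    elapsed = time.time() - start
    # Return 0.0 when no rate can be computed (no frames or zero interval).
    if count == 0 or elapsed <= 0:
        return 0.0
    return count / elapsed


if __name__ == "__main__":
    # Simulate a processing step that takes about 10 ms per frame.
    fps = measure_fps(lambda frame: time.sleep(0.01), range(20))
    print("Processed about %.1f frames per second" % fps)
```

If the measured rate falls well below the rate requested in the capture pipeline, the processing step is the bottleneck rather than the camera.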

The next step is to learn basic implementations of CUDA-based parallel programming on the Jetson Nano, followed by a deep learning-based solution for face/object detection using TensorRT on the Jetson Nano GPU.