Digitizing emotions for remote transfer and as feedback for virtual reality platforms

Introduction

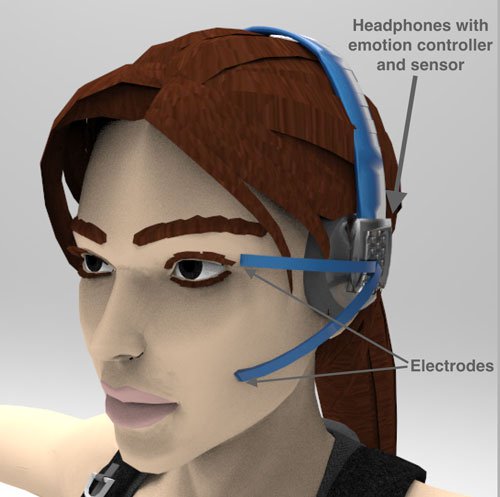

The following article is based on what I learned from a short study of existing research into the possibility of digitising our emotions, both for remote transfer between individuals and as feedback to virtual reality platforms. My concept was to introduce a wearable emotion interface that measures our emotions through facial EMG (electromyography), digitises them, and transfers them to another remote individual wearing the same headset, invoking similar feelings in that person. Invoking an emotion could be achieved through facial EMS (electrical muscle stimulation). Moreover, this system could be used with virtual reality, feeding a person's emotions back to the platform and even invoking genuine emotions through it, thereby using psychophysiology to improve the immersive experience of virtual reality platforms.

For example, a person based in India could remotely experience the emotional episode of a person sitting in California (that is, feel similar emotions) through an advanced wearable headset.

All the referenced research work can be found here, and a PDF version of this article can be viewed here. The rest of the article is structured as follows:

Contents

1. Problem statement
2. Corroborating the concepts
3. Dimensions of emotion
4. Conceptual prototyping: Facial EMG and EMS
    1. Sensing of emotion through facial EMG
        a. Physiological effect of emotion on facial muscles
        b. Facial EMG measurement
    2. Electrical stimulation of muscles to invoke emotions
5. Similar research
6. Interesting research: Measure emotion using Galvanic Skin Response
7. Further research
8. Applications of the proposed wearable emotion interface
9. Conclusion

1. Problem Statement

In the 21st century, people can talk to each other from different parts of the globe, mainly through instant messaging, email and other text-based platforms. To convey emotions, they rely solely on emojis such as :) ;) :(.

With the advancement of technology, it is high time we created a platform that can understand and evoke emotions in people. What is needed is a wearable interface that allows the remote transfer of emotions between people and makes communication more organic.

This short clip from the movie “The Martian” illustrates the problem statement well:

2. Corroborating the Concepts

Research to date will be used to corroborate the feasibility of the two-part system:

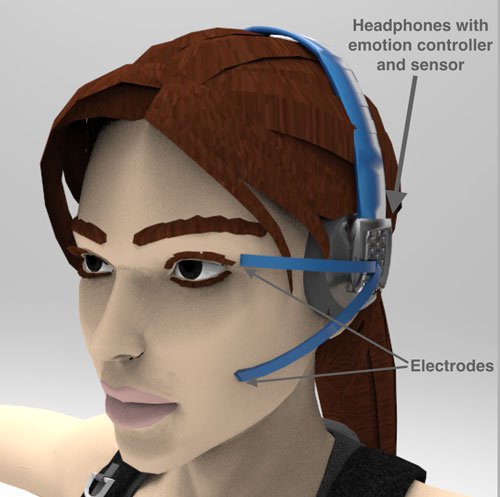

a. Sensing of emotion through facial EMG
b. Electrical stimulation of muscles to invoke emotions

a. Sensing of Emotion Through Facial EMG

Dr. Paul Ekman, a noted American psychologist, has researched people's emotions and facial expressions for more than 40 years. He found that the emotions we experience are associated with specific facial expressions, i.e. with specific groups of facial muscles: Ekman, P., Friesen, W. V., & Ancoli, S. (1980). Facial Signs of Emotional Experience. Journal of Personality and Social Psychology, 39(6), 1125-1134.

As part of his research, he studied and worked with native tribes of New Guinea who had had very little contact with the outside world. Through his interactions with them, he learned that their expressions could be predicted just like anyone else's, suggesting that every human is programmed to produce the same expressions. This supports the view that facial expressions of emotion are universal and can be understood by any other human by reading the face. His work is a modern-day extension of the research by Charles Darwin and Tomkins on breaking down human facial expressions.

This opens up a huge avenue for emotion-based communication interfaces: a platform capable of understanding the emotions of any individual in the world, irrespective of their origin or the language they speak.

There are two ways to sense a person's emotion: self-report and concurrent expression. Self-report is what we normally use while instant messaging: we use emojis to report our feelings, which forces us to stop what we are doing and report them. The system I propose instead uses concurrent expression, where the user's emotion is recorded unconsciously, so that the genuine feeling is captured and transmitted (Picard, R.W.: Toward computers that recognize and respond to user emotion. IBM Systems Journal 39 (2000) 705–719).

Our facial expressions, and thus our emotions, can be sensed by measuring the muscle activity in our face. Facial electromyography will be used to detect muscle activation and the corresponding emotions invoked in an individual. Research by U. Dimberg supports facial EMG as an accurate technique for sensing a person's emotions: Dimberg, U. (1990), For Distinguished Early Career Contribution to Psychophysiology: Award Address, 1988. Psychophysiology, 27: 481–494. doi: 10.1111/j.1469-8986.1990.tb01962.x.

b. Electrical Stimulation of Muscles to Invoke Emotions

Alongside this, I am also researching whether we can evoke genuine emotions through a wearable interface. To corroborate this concept, I rely on Dr. Paul Ekman's work, in which he found that voluntarily activating the facial muscles associated with a particular emotion can in turn evoke that emotion.

The second part of my idea was triggered by his experiment showing that a person can trigger a genuine emotion by voluntarily recreating the facial expression associated with it: Ekman, P., & Davidson, R. J. (1993). Voluntary smiling changes regional brain activity. Psychological Science, 4(5), 342-345.

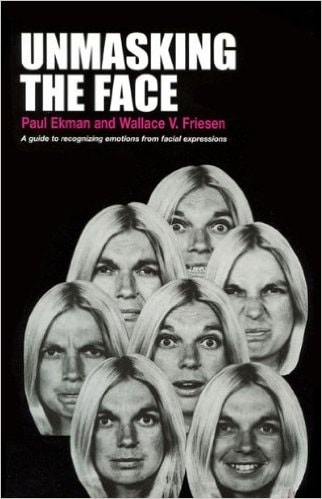

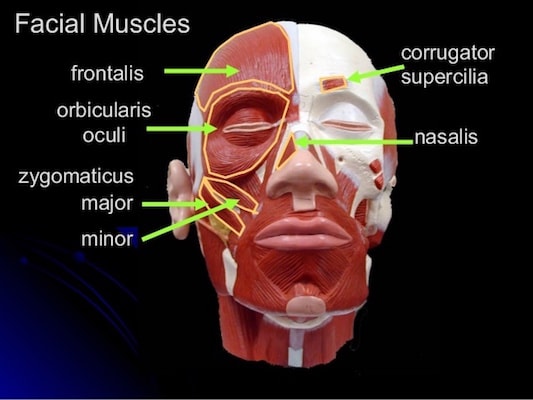

The conceptual wearable emotion interface will be capable of activating the muscles involved in natural smiling according to the Duchenne marker (Duchenne de Boulogne GB. In: The Mechanism of Human Facial Expression. Cuthbertson RA, translator and editor. New York: Cambridge University Press; 1990), which states that two facial muscles, the zygomatic major and the orbicularis oculi, are involved in a natural smile. You can read about them in detail in section 4, part 1.

Stimulating these facial muscles with electric currents could potentially recreate the corresponding emotions in the individual. Prior research has investigated whether facial muscle stimulation can trigger emotions: Zariffa, J., Hitzig, S. L. and Popovic, M. R. (2014), Neuromodulation of Emotion Using Functional Electrical Stimulation Applied to Facial Muscles. Neuromodulation: Technology at the Neural Interface, 17: 85–92. doi: 10.1111/ner.12056. In that study, the researchers found that emotions such as daring, concentration and determination were brought out in individuals.

3. Dimensions of Emotion

Here, an emotion can be described as a point in a two-dimensional space of affective valence and arousal. Valence describes whether an emotion is positive (e.g. happiness) or negative (e.g. sadness), while arousal expresses its intensity, from calm to excited; high-arousal states such as fear or anger can also be placed in this space. A neutral state can be incorporated as well.
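To make the two-dimensional model concrete, here is a minimal Python sketch. It assumes, hypothetically, that valence and arousal have each been normalized to [-1, 1]; the neutral radius and the quadrant labels are illustrative choices, not values from the cited research.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class EmotionPoint:
    """A point in the valence-arousal plane.

    Both axes are assumed (hypothetically) to be normalized to [-1, 1]:
    valence runs from negative to positive, arousal from calm to excited.
    """
    valence: float
    arousal: float

    def __post_init__(self) -> None:
        for name in ("valence", "arousal"):
            value = getattr(self, name)
            if not -1.0 <= value <= 1.0:
                raise ValueError(f"{name} must lie in [-1, 1], got {value}")


def coarse_label(point: EmotionPoint, neutral_radius: float = 0.2) -> str:
    """Map a point to an illustrative quadrant label.

    Points closer to the origin than `neutral_radius` count as neutral;
    the radius and the label names are arbitrary example choices.
    """
    if math.hypot(point.valence, point.arousal) < neutral_radius:
        return "neutral"
    if point.valence >= 0:
        return "excited/happy" if point.arousal >= 0 else "calm/content"
    return "afraid/angry" if point.arousal >= 0 else "sad/bored"


print(coarse_label(EmotionPoint(0.7, 0.6)))    # excited/happy
print(coarse_label(EmotionPoint(-0.5, 0.8)))   # afraid/angry
print(coarse_label(EmotionPoint(0.05, -0.1)))  # neutral
```

A single point cannot represent mixed emotions, where positive and negative feelings co-occur; one option would be to track positive and negative valence as separate components.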

There is also the concept of mixed emotions, where a person experiences several emotions simultaneously. For example, a person feels happy when he sees his old grandmother after a long time, but at the same time he is sad because of her deteriorating health. The research cited below used four emotion categories for testing: neutral, mixed, positive and negative (valence only).

That study also found that EMG of the frontalis muscle did not discriminate significantly between emotions. The zygomaticus major signal was the most discriminative, and its mean, absolute deviation, standard deviation and variance were calculated. Reference: Broek, E. L., Schut, M. H., Westerink, J. H. D. M., Herk, J., Tuinenbreijer, K., Huang, T. S., "Computing Emotion Awareness Through Facial Electromyography," Computer Vision in Human-Computer Interaction: ECCV 2006 Workshop on HCI, Graz, Austria, May 13, 2006, Proceedings.
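The four statistics named above are straightforward to compute per analysis window. The sketch below is an illustration, not the study's code: it assumes the input is a window of EMG amplitude samples, interprets "absolute deviation" as the mean absolute deviation from the mean, and uses population rather than sample statistics.

```python
import statistics
from typing import Dict, Sequence


def zygomaticus_features(window: Sequence[float]) -> Dict[str, float]:
    """Compute mean, mean absolute deviation, standard deviation and variance.

    `window` is a hypothetical sequence of EMG amplitude samples (for example
    in microvolts) taken from one analysis window over the zygomaticus major.
    """
    if not window:
        raise ValueError("window must contain at least one sample")
    mean = statistics.fmean(window)
    # Mean absolute deviation from the mean (our reading of "absolute deviation").
    mean_abs_dev = statistics.fmean(abs(x - mean) for x in window)
    # Population variance, reusing the mean computed above.
    variance = statistics.pvariance(window, mu=mean)
    return {
        "mean": mean,
        "mean_abs_dev": mean_abs_dev,
        "std": variance ** 0.5,
        "variance": variance,
    }


print(zygomaticus_features([1.0, 2.0, 3.0, 4.0]))
```

In a classifier, these four numbers would form the feature vector for each window; whether population or sample statistics were used in the original study is not stated here.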

4. Conceptual Prototyping: Facial EMG and EMS

1. Sensing of Emotion Through Facial EMG

Here, an EMG sensor could be used with a platform such as Arduino to sense facial muscle activation. Using this setup, the facial muscles corresponding to different emotions would be tested. Paul Ekman's book Unmasking the Face could serve as a guide to the various emotions and the muscles involved in displaying them on the face. The following muscles are associated with the given emotions:

Happiness: Zygomatic major and orbicularis oculi

Sadness/anger: Corrugator muscle

They can be visualized as shown: (reference video)

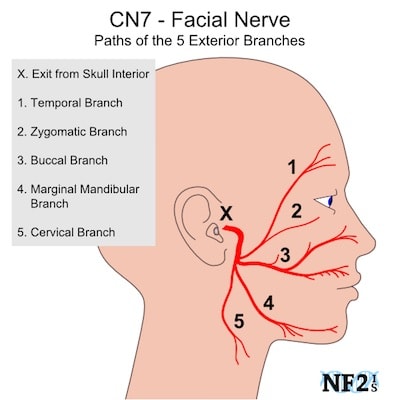
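As a rough illustration of how the muscle-to-emotion mapping above could drive a first prototype, the sketch below applies simple rules to normalized activations. The key names, the normalization (for example, dividing the EMG envelope by the level recorded during a maximal voluntary contraction) and the 0.3 threshold are all hypothetical; requiring both the zygomatic major and the orbicularis oculi for a genuine smile follows the Duchenne marker described in section 2.

```python
from typing import Mapping


def classify_expression(activation: Mapping[str, float], threshold: float = 0.3) -> str:
    """Rough rule-based mapping from facial muscle activation to an emotion label.

    `activation` holds hypothetical normalized activations in [0, 1] under the
    keys "zygomaticus_major", "orbicularis_oculi" and "corrugator". Missing
    keys are treated as inactive. The threshold is an illustrative value.
    """
    zyg = activation.get("zygomaticus_major", 0.0)
    orb = activation.get("orbicularis_oculi", 0.0)
    cor = activation.get("corrugator", 0.0)

    if zyg >= threshold and orb >= threshold:
        return "happiness (Duchenne smile)"
    if zyg >= threshold:
        # Zygomaticus without orbicularis oculi: a smile lacking the Duchenne marker.
        return "smile without orbicularis oculi (possibly non-genuine)"
    if cor >= threshold:
        return "sadness/anger"
    return "neutral"


print(classify_expression({"zygomaticus_major": 0.6, "orbicularis_oculi": 0.5}))
```

Rules like these are only a starting point; the studies discussed below suggest that the relationship between valence and muscle activity is not linear, which a learned classifier could capture better.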

Interesting Fact: All the muscles of facial expression are controlled by the seventh cranial nerve (CN VII), i.e. the facial nerve. Research could be done to find out whether this nerve can be tapped to decode our facial expressions from a single point.

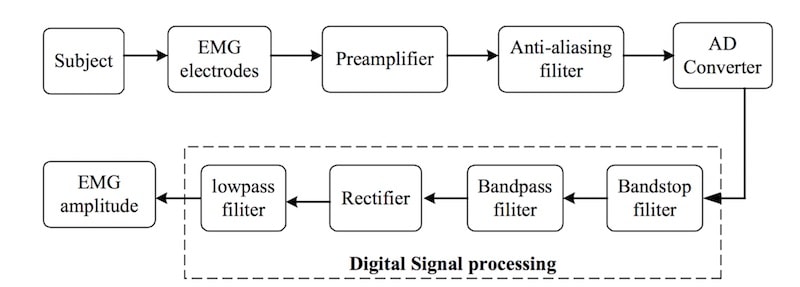

The research paper H. C. Ning, C. C. Han, C. H. Yuan, “The Review of Applications and Measurements in Facial Electromyography,” Journal of Medical and Biological Engineering, vol. 25, pp 15-20, 2004 also helped me understand the process of measuring facial EMG. It discusses the measurement problems encountered in recording facial EMG and divides the recording process into three stages:

- Electrode selection and placement

- Facial EMG recording

- Signal conditioning
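The details of the signal-conditioning stage are not reproduced here. A common way to turn raw surface EMG into an amplitude measure, assumed for this sketch, is to remove the DC offset and compute a moving root-mean-square (RMS) envelope; the 50-sample window is an arbitrary example value.

```python
import math
from collections import deque
from typing import Deque, Iterable, List


def moving_rms_envelope(samples: Iterable[float], window: int = 50) -> List[float]:
    """Estimate an EMG amplitude envelope with a trailing moving-RMS window.

    1. Remove the DC offset by subtracting the mean of the whole recording.
    2. Square each sample (squaring makes explicit rectification unnecessary).
    3. Average the squares over the last `window` samples and take the root.
    """
    if window < 1:
        raise ValueError("window must be >= 1")
    data = list(samples)
    if not data:
        return []
    offset = sum(data) / len(data)
    recent: Deque[float] = deque()
    running_sum = 0.0
    envelope: List[float] = []
    for x in data:
        sq = (x - offset) ** 2
        recent.append(sq)
        running_sum += sq
        if len(recent) > window:
            running_sum -= recent.popleft()
        # max() guards against tiny negative values from floating-point drift.
        envelope.append(math.sqrt(max(running_sum, 0.0) / len(recent)))
    return envelope
```

For real-time use, the whole-recording mean would have to be replaced by a high-pass filter or a running baseline, since the full recording is not available in advance.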

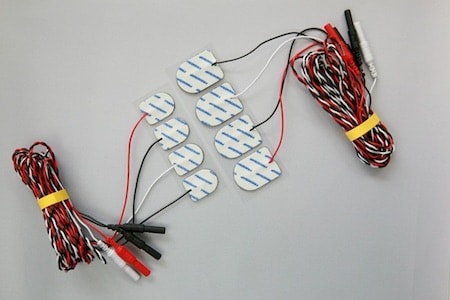

Surface EMG, being non-invasive, is safer and easier to use than invasive (needle) methods, even though it picks up crosstalk from surrounding muscles and has a lower signal-to-noise ratio. Because safety is considered more important than accuracy, most researchers adopt non-invasive surface electrodes (Fridlund AJ and Cacioppo JT, "Guidelines for human electromyographic research," Psychophysiology, 23(5): 567-589, 1986) (Cole KJ, Konopacki RA, and Abbs JH, "A miniature electrode for surface electromyography during speech," J Acoust Soc Am, 74: 1362-1366, 1983).

a. Physiological effect of emotion on facial muscles

The paper (Larsen JT, Norris CJ, Cacioppo JT (September 2003). "Effects of positive and negative affect on electromyographic activity over zygomaticus major and corrugator supercilii". Psychophysiology 40(5): 776–85. doi:10.1111/1469-8986.00078. PMID 14696731) helped me understand in detail the effects of emotion on our facial muscles, mainly the zygomaticus major and the corrugator supercilii. The following points are based on that research.

Compared with unpleasant stimuli, pleasant stimuli elicit greater EMG activity over the zygomaticus major relative to the corrugator supercilii.

The corrugator supercilii is sparsely represented in the motor cortex and is therefore less likely to be involved in fine voluntary motor behaviours such as articulation and the nuanced displays designed to mask affective reactions. The zygomaticus major and neighbouring muscles are well represented in the motor cortex, affording them greater involvement in display rules and other fine voluntary motor behaviours. Moreover, like the muscles of the back, the corrugator tends to be bilaterally innervated, another characteristic that impedes fine voluntary motor control. In contrast, the zygomaticus, like the muscles of our fingers, receives greater contralateral innervation.

Activity over the zygomaticus major shows a quadratic rather than a linear effect of valence. It appears to be characterized by a threshold effect, and few stimuli in that study exceeded the threshold.

When participants viewed pictures of varying valence, very negative and mildly negative pictures elicited greater activity over the corrugator supercilii than neutral pictures did, while very positive pictures elicited less activity than neutral pictures.

Very positive pictures potentiated activity over the zygomaticus major, while neutral pictures inhibited it. Because of this inhibition, mildly positive and very negative pictures elicited greater activity over the zygomaticus than neutral pictures did. Increases in self-reported negative affect potentiated activity over the corrugator, whereas increases in self-reported positive affect inhibited it.

Increases in positive affect potentiated activity over the zygomaticus, but increases in negative affect had little effect, showing that the relationship is not reciprocal. Thus, with respect to affective variables (positive, negative), activity over the zygomaticus major may offer greater specificity than activity over the corrugator.

Their findings that positive affect decreases and negative affect increases activity over the corrugator supercilii suggest that an ambivalent stimulus may have antagonistic effects on that muscle, resulting in little change in activity. In contrast, the findings that positive affect increased activity over the zygomaticus major while negative affect had no effect suggest that ambivalent stimuli may increase activity over the zygomaticus major.

b. Facial EMG measurement

Electrode Specification for Facial EMG

In EMG measurements, all recording electrodes should be made of the same material to minimize half-cell potential differences. Circular Ag/AgCl electrodes are most often adopted (Hermie J Hermens, Bart Freriks, Catherine Disselhorst-Klug, and Gunter Rau, "Development of recommendations for SEMG sensors and sensor placement procedures," Journal of Electromyography and Kinesiology, 10: 361-374, 2000).

Best site for electrode placement

The best site for electrode placement is the midline of the muscle belly (C.J. De Luca, "The use of surface electromyography in biomechanics," Journal of Applied Biomechanics, 1997). Lapatki et al. suggested that the exact muscle position can be found by touching the muscle while it contracts (Lapatki, B. G., Stegeman, D. F., and Jonas, I. E., "A surface EMG electrode for the simultaneous observation of multiple facial muscles," Journal of Neuroscience Methods, 123(2): 117-128, 2003).

Reducing measurement errors

Movement or deformation of the skin under the electrode can cause measurement errors by changing the skin-electrode impedance. The most convenient way to reduce this is to place a layer of conductive gel between the electrode and the skin; this intermediate gel damps mechanical disturbances between them.

To reduce power-line interference and noise in the measurement, the electrode lead wires and measurement devices are shielded.

Attaching the electrode on the midline of the muscle belly reduces the likelihood of crosstalk from other muscles, and the double differential technique, which uses three electrodes, can reduce it further. The paper also presents a block diagram of the signal acquisition process (H. C. Ning, C. C. Han, C. H. Yuan, “The Review of Applications and Measurements in Facial Electromyography,” Journal of Medical and Biological Engineering, vol. 25, pp 15-20, 2004).
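The double differential idea can be written out explicitly. Assuming three equally spaced electrodes along the muscle (v1, v2 and v3 are hypothetical channel names), the two single-differential signals are subtracted from each other: (v1 - v2) - (v2 - v3) = v1 - 2 * v2 + v3. Any component that is identical on all three electrodes cancels, which is why the technique suppresses common-mode interference and crosstalk that reaches the electrodes with nearly equal amplitude.

```python
from typing import List, Sequence


def double_differential(v1: Sequence[float], v2: Sequence[float],
                        v3: Sequence[float]) -> List[float]:
    """Combine three equally spaced electrode channels into one signal.

    dd = (v1 - v2) - (v2 - v3) = v1 - 2*v2 + v3

    A component identical on all three channels cancels, and so does a
    component that changes linearly across the three electrodes.
    """
    if not (len(v1) == len(v2) == len(v3)):
        raise ValueError("all electrode channels must have the same length")
    return [a - 2 * b + c for a, b, c in zip(v1, v2, v3)]


# Example: interference common to all electrodes plus a local signal on v1 only.
common = [0.5, -0.2, 0.8]
local = [1.0, 0.0, -0.3]
v1 = [c + l for c, l in zip(common, local)]
print(double_differential(v1, common, common))  # approximately equal to `local`
```

The trade-off is that the double differential output is also more sensitive to the exact electrode spacing and placement, which is one more reason placement on the muscle belly matters.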

Interesting fact: Facial EMG has been recorded for different tastes. Subjects described the hedonic quality of what they tasted while facial EMG activity in the levator labii superioris/alaeque nasi muscle region was recorded, and the experiments showed that facial EMG could be used as an indicator of palatability (Hu, S., Player, K. A., McChesney, K. A., Dalistan, M. D., Tyner, C. A., and Scozzafava, J. E., "Facial EMG as an indicator of palatability in humans," Physiology & Behavior, 68(1): 31-35, 1999).

2. Electrical Stimulation of Muscles to Invoke Emotions

To output emotions by stimulating the facial muscles (zygomatic major and orbicularis oculi), we can use existing electrical stimulation methods: EMS (electrical muscle stimulation) or TENS (transcutaneous electrical nerve stimulation).

EMS is mainly used for treating muscle fatigue by helping the muscles contract and relax, whereas TENS is mainly used to suppress pain by interfering with the nerves and their signals. Experiments are needed to find out which of the two methods is more effective at recreating emotions.

A proposed EMS machine.

Such systems usually use a 12 V DC supply and apply current in pulses, in the range of 0-100 mA.
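To illustrate what "applied in pulses" means, the sketch below samples a stimulation current waveform. The waveform shape is an assumption: it uses symmetric biphasic rectangular pulses, a common choice in electrical stimulation because the positive and negative phases carry equal charge. The frequency, pulse width and duration in the example are hypothetical; only the 0-100 mA limit comes from the text.

```python
from typing import List

MAX_CURRENT_MA = 100.0  # upper end of the 0-100 mA range mentioned in the text


def biphasic_pulse_train(amplitude_ma: float, frequency_hz: float,
                         pulse_width_us: int, duration_ms: int,
                         sample_period_us: int = 10) -> List[float]:
    """Sample a symmetric biphasic rectangular current waveform (in mA).

    Each period starts with a positive phase of `pulse_width_us`, immediately
    followed by a negative phase of equal width (zero net charge per pulse),
    and then stays at 0 mA until the next period. The amplitude is clamped
    to 0-100 mA. All parameter values are hypothetical.
    """
    if frequency_hz <= 0 or pulse_width_us <= 0 or sample_period_us <= 0:
        raise ValueError("frequency, pulse width and sample period must be positive")
    period_us = 1_000_000 / frequency_hz
    if 2 * pulse_width_us > period_us:
        raise ValueError("both phases must fit inside one period")
    amp = float(min(max(amplitude_ma, 0.0), MAX_CURRENT_MA))
    samples: List[float] = []
    for t in range(0, duration_ms * 1000, sample_period_us):
        phase_t = t % period_us  # time since the start of the current period
        if phase_t < pulse_width_us:
            samples.append(amp)
        elif phase_t < 2 * pulse_width_us:
            samples.append(-amp)
        else:
            samples.append(0.0)
    return samples


# Hypothetical example: 20 mA, 50 Hz, 200 us per phase, 20 ms of waveform.
train = biphasic_pulse_train(amplitude_ma=20, frequency_hz=50,
                             pulse_width_us=200, duration_ms=20)
```

Whether EMS-style or TENS-style parameters work better for the facial muscles is exactly the open experimental question raised above; the sketch only shows how such parameters define a waveform.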

5. Similar Research

1. Facial Performance Sensing Head-Mounted Display

Hao Li, Laura Trutoiu, Kyle Olszewski, Lingyu Wei, Tristan Trutna, Pei-Lun Hsieh, Aaron Nicholls, Chongyang Ma. ACM Transactions on Graphics, Proceedings of the 42nd ACM SIGGRAPH Conference and Exhibition 2015 (SIGGRAPH 2015), 08/2015.

In this research by Oculus and Facebook, the researchers developed a way to allow face-to-face communication in virtual worlds. Since head-mounted displays (HMDs) occlude the upper part of the face, cameras cannot record the facial expressions there. Hence, they created a system in which the inner foam lining of the HMD is embedded with strain gauges that record facial muscle activity, while a camera records the expressions around the mouth. Check out their demo video:

2. Wearable Device for Reading Facial Expressions

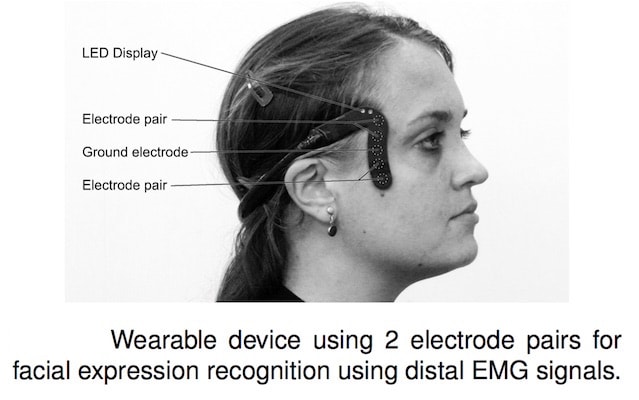

Gruebler, A., Suzuki, K., “Design of a Wearable Device for Reading Positive Expressions from Facial EMG Signals“, IEEE Transactions on Affective Computing, (accepted), 2014.

This wearable device measures distal EMG signals (signals recorded at some distance from the facial muscles that produce them) and estimates emotions from that data. Since the data is not taken directly over the muscles involved, techniques such as independent component analysis and artificial neural networks are used to achieve a high emotion recognition rate.

3. Expression Glasses from MIT Media Lab

Scheirer, J., Fernandez, R., & Picard, R. W. (1999). Expression glasses: a wearable device for facial expression recognition. Computers in Human Interaction (pp. 262-264). Pittsburgh, USA.

Another interesting piece of research on sensing a person's emotion was “Expression Glasses”. This system, built in the form factor of spectacles, uses piezoelectric sensors on the forehead to detect whether confusion or interest is evoked in a person. It was mainly focused on obtaining feedback for different processes. The system was wired rather than truly wearable, and relied on a Windows 95-based desktop running LabVIEW to process the information.

6. Interesting research: Measure Emotion Using Galvanic Skin Response

Based on: Picard, R. W., & Scheirer, J. (2001). The Galvactivator: A Glove that Senses and Communicates Skin Conductivity. In Proceedings of the 9th International Conference on HCI, pp. 1538-1542.

The Galvactivator is a device that measures electrodermal response, i.e. skin conductivity. This response is closely associated with emotional arousal and can be a good predictor of states such as attention and excitement. Because skin conductivity changes with the intensity of our emotional state, the researchers shrank the system into a wearable form factor, enabling responses to be measured while a person goes about everyday activities, free from a tethered laboratory setup.
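Skin conductance is usually obtained by measuring a resistance and inverting it. The sketch below assumes a hypothetical voltage-divider circuit, not the Galvactivator's actual circuit: the skin between two electrodes is in series with a fixed 100 kΩ resistor on a 3.3 V supply, and the voltage across the fixed resistor is measured.

```python
def skin_conductance_us(v_measured: float, v_supply: float = 3.3,
                        r_fixed_ohm: float = 100_000.0) -> float:
    """Convert a voltage-divider reading into skin conductance in microsiemens.

    Hypothetical circuit: skin resistance in series with `r_fixed_ohm`,
    with `v_measured` taken across the fixed resistor. Then
        I      = v_measured / r_fixed
        R_skin = (v_supply - v_measured) / I
        G_skin = 1 / R_skin   (reported in microsiemens)
    """
    if not 0.0 < v_measured < v_supply:
        raise ValueError("v_measured must lie strictly between 0 and v_supply")
    current_a = v_measured / r_fixed_ohm
    r_skin_ohm = (v_supply - v_measured) / current_a
    return 1e6 / r_skin_ohm


# 0.3 V across 100 kOhm means 3 uA flows; 3.0 V / 3 uA = 1 MOhm of skin, i.e. about 1 uS.
print(skin_conductance_us(0.3))
```

A higher reading across the fixed resistor means more current, lower skin resistance and therefore higher conductance, which is the direction associated with rising arousal.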

However, electrodermal response alone makes it difficult to tell what made you excited unless several potentially confounding factors are controlled. It requires the person to remain seated in a place where temperature and humidity are fairly constant, with minimal physical activity, and without the wearable being disturbed. This further bolsters the need for a more robust and accurate wearable that can track our emotions effectively, which is why the current research is being pursued.

7. Further Research

To create a wearable headset capable of tracking emotions, further research has to be done in the following areas:

- Creating a minimalistic design for a headset, or individual micro-sticker modules, that can be placed in contact with the facial muscles for sensing, thus eliminating the labyrinth of wires usually associated with such systems.

- Finding out whether there is a central point, on the face or elsewhere, through which the electrical activity of all the facial muscles can be sensed. This idea is akin to the Myo armband, where a single area of the forearm is used to detect a wide range of hand gestures (tapping the facial nerve, CN VII, for data is a possibility).

- Finding correlations between the body's autonomic activity and particular emotions, and incorporating sensors into the headset that can measure galvanic skin response, heart rate, sweat gland activity, respiration, blood pressure and body temperature.

8. Applications of the Proposed Wearable Emotion Interface

VR - In virtual reality, more and more technologies are being developed to make the experience more real and organic, from omnidirectional treadmills to body movement trackers. However, no system has yet been developed that conveys a person's emotions to the platform in real time. Emotion-based feedback would allow the virtual environment to adapt in real time to the person's current emotional state, letting the system evolve the story or scenario around that input.
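A minimal sketch of such a feedback loop, under stated assumptions: readings arrive as (valence, arousal) pairs normalized to [-1, 1], they are smoothed with an exponential moving average so that a single noisy reading does not change the scene, and a few illustrative thresholds pick an adjustment. The class name, thresholds and adjustment labels are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class EmotionFeedback:
    """Smooths (valence, arousal) readings for a hypothetical VR scene controller.

    Readings are assumed to be normalized to [-1, 1]. The smoothing factor
    and thresholds are illustrative, not taken from any study.
    """
    alpha: float = 0.2    # weight of the newest reading in the moving average
    valence: float = 0.0  # smoothed valence estimate
    arousal: float = 0.0  # smoothed arousal estimate

    def __post_init__(self) -> None:
        if not 0.0 < self.alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")

    def update(self, valence: float, arousal: float) -> None:
        """Blend a new reading into the exponential moving average."""
        self.valence += self.alpha * (valence - self.valence)
        self.arousal += self.alpha * (arousal - self.arousal)

    def scene_adjustment(self) -> str:
        """Pick an illustrative adjustment for the virtual environment."""
        if self.arousal > 0.6 and self.valence < 0:
            return "reduce intensity"    # user appears stressed or afraid
        if self.arousal < -0.4:
            return "increase intensity"  # user appears bored or disengaged
        return "keep current scenario"


feedback = EmotionFeedback()
feedback.update(valence=0.5, arousal=0.5)
print(feedback.scene_adjustment())  # keep current scenario
```

The smoothing factor trades responsiveness against stability: a larger `alpha` reacts faster to emotional changes but also to sensor noise.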

Media feedback and output - Here, real-time feedback can be obtained from a person while he or she is listening to music or consuming any other media. The emotional feedback can allow an intelligent playback system to identify the kind of content the person likes and create a curated list of similar songs or media.

It also opens up big-data avenues for corporations, allowing them to find out which content and media people are most attracted to.

Moreover, while a person is using any media, for example music, the wearable interface could also amplify the overall experience by evoking emotions that match the song, augmenting the pleasure.

New emotion-based organic communication platforms - The wearable interface aims to make long-distance communication more organic by conveying people's real-time emotions during text-based and other kinds of conversation. The interface can sense and transfer emotions at one end and mimic the same emotion at the other end through electrical stimulation of the corresponding facial muscles.
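One way to picture the transfer step is as a small message passed between two headsets. The packet format below is purely hypothetical: it carries the sender, a valence/arousal estimate and per-muscle activations as JSON, and the receiving side selects the muscles whose activation exceeds an illustrative threshold as candidates for stimulation.

```python
import json
import time
from dataclasses import asdict, dataclass, field
from typing import Dict, List


@dataclass
class EmotionPacket:
    """Hypothetical message carrying one sensed emotional state to a remote headset."""
    sender_id: str
    valence: float  # assumed normalized to [-1, 1]
    arousal: float  # assumed normalized to [-1, 1]
    # Normalized activations, e.g. {"zygomaticus_major": 0.7}
    muscle_activation: Dict[str, float] = field(default_factory=dict)
    timestamp: float = field(default_factory=time.time)  # seconds since the epoch

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, text: str) -> "EmotionPacket":
        return cls(**json.loads(text))


def stimulation_targets(packet: EmotionPacket, threshold: float = 0.3) -> List[str]:
    """Muscles the receiving headset would consider stimulating (illustrative rule)."""
    return sorted(name for name, level in packet.muscle_activation.items()
                  if level >= threshold)


sent = EmotionPacket("alice", 0.6, 0.3,
                     {"zygomaticus_major": 0.7, "orbicularis_oculi": 0.5, "corrugator": 0.1},
                     timestamp=1.0)
received = EmotionPacket.from_json(sent.to_json())
print(stimulation_targets(received))  # ['orbicularis_oculi', 'zygomaticus_major']
```

Sending muscle activations rather than only an emotion label keeps the receiving side close to the sensed expression; a real system would also need authentication and safety limits before any stimulation.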

Training autistic individuals to understand emotions - Many autistic individuals find it difficult to understand or recognize emotions, a difficulty that has been linked to atypical development of the amygdala and the temporoparietal junction in the brain. With this system, when another person wears the interface, the autistic individual, wearing a second interface, could have his or her own facial muscles stimulated to mimic that person's expression, evoking the same emotion and helping them understand it. The system could hence serve as a training platform that helps autistic individuals understand facial expressions and supports them in social interaction.

Gaming - Here, the emotions of an individual can be fed to the game so that its storyline is remodelled to cater to the user's feelings.

Lie detection - This system could enable a new lie detection platform that senses the micro expressions the face makes during verbal communication. Paul Ekman has argued in his work that these micro expressions, which are made unconsciously, can reveal whether a person is being honest about something. Thus the wearable emotion interface could allow the development of a new breed of lie detectors. The TV series Lie to Me is based on this concept.

Facial muscle based control for paraplegics - This interface can allow paraplegics to use their facial muscles to control digital systems or even move a wheelchair in the desired direction.

9. Conclusion

The concept presented here also raises a fundamental question: should emotions be manipulated (recognised and induced)?

To answer this, I rely on the expertise of R. W. Picard (founder of the Affective Computing Research Group, MIT Media Lab), who states that there is nothing wrong with either sensing emotions or invoking them; the worst that could happen is emotion manipulation for malicious purposes. This sort of manipulation is already happening (in cinema, music, marketing, politics, etc.), so why not use computers to understand it better? (R. W. Picard, Affective Computing, MIT Press, Cambridge, MA)

The need for a wearable emotion interface is expressed clearly in H. C. Ning, C. C. Han, C. H. Yuan, “The Review of Applications and Measurements in Facial Electromyography,” Journal of Medical and Biological Engineering, vol. 25, pp 15-20, 2004. The authors hope to decrease the number of electrodes on the face when recording EMG, which would simplify the system design and reduce development cost. Furthermore, the presence of electrodes affects the movement of the facial muscles and decreases the validity of the measurement, and facial electrodes impair the subjects' appearance, reducing the acceptance of facial EMG products. In designing a facial sensor, it is therefore critical to find the position of the facial muscles of interest and fix the electrodes on the face without disturbing facial movement. As a result, more research of the kind outlined in section 7 is needed to create systems that can understand human emotions and deliver more relevant content to us.