Learn about the relationship between edge computing and AI and see how they can come in handy for your projects!

Edge computing and artificial intelligence seem to get more important every day. However, many makers don’t know a lot about either topic.

In this article, I’ll try to give you a short overview of both topics. Note that these are incredibly broad fields, and it won’t be possible to cover them in great detail. My goal is to give you a good basic understanding of edge computing and AI so that you can start doing your research effectively.

Edge Computing vs. Cloud Computing

In the last decade, everything and everyone seemed to use the cloud. But what is cloud computing? Simply put, it’s the availability of remote computer system resources when you need them.

The important aspect of the cloud is that these computer resources are not within your network, nor are they typically managed by the user. Usually, these resources are shared, but it’s also possible to have a private cloud, which is typical for large companies. Most of us know and use cloud computing for data-storage and media-streaming applications.

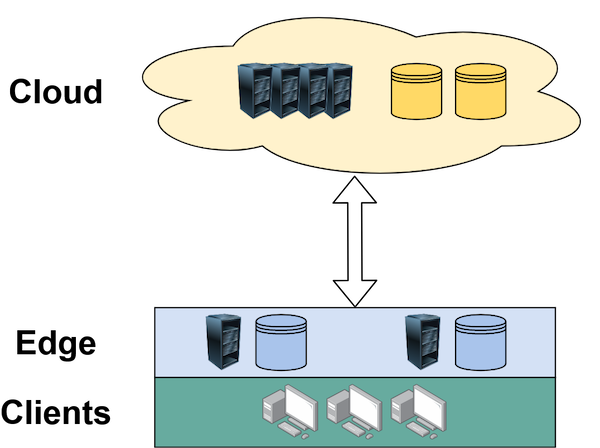

If we move those resources closer to the user, however, we end up with dedicated edge servers. This is where defining edge computing gets difficult, because an edge node can be any computing resource that sits somewhere between the cloud and the user. These edge nodes can perform many of the same tasks as computers in the cloud, such as filtering and storing data.

The relationship between the cloud, edge networks, and client devices.

So edge computing happens at the border of the network: somewhere between the cloud and the user, but close to the user. It usually concentrates on processing real-time data, typically coming from sensors and user input. Cloud computing, by contrast, often operates on big data.

A Brief Description of AI

Artificial intelligence is another often-misused term in modern technology. I won’t go into too much detail here, because this field is huge and can easily fill textbooks with at least 1000 pages. But I think it’s important to discuss at least one definition of what AI can be.

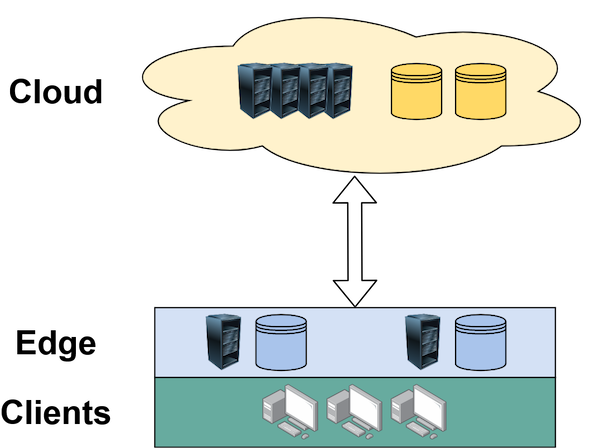

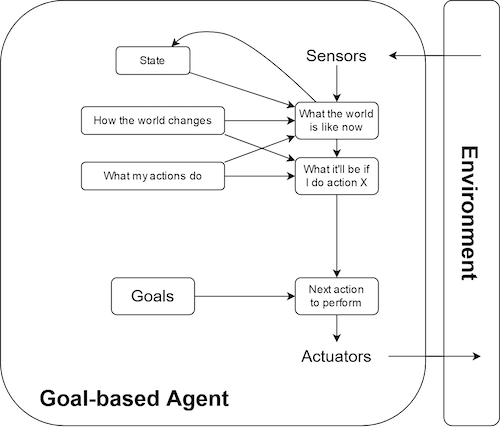

I think AI is best described as an entity that perceives its environment and takes the action that maximizes its chance of success. In this context, such entities are called agents, and the PEAS system (which stands for performance measure, environment, actuators, and sensors) is often used to describe them. However, the term AI is also often used to describe machines that can learn and solve problems.

A theoretical model of a goal-based agent.

Let me give you a short example of an agent described with PEAS.

Let’s suppose our agent sits in a factory and decides whether an assembled product is ready to be shipped to a customer or whether it should be discarded. The performance measures are how reliably it detects errors and how quickly it finds faulty products. The environment is the conveyor belt on which the pieces are traveling. The actuator can be an arm that removes faulty pieces, and the sensor is a camera.
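To make the factory example a bit more concrete, here is a minimal Python sketch of such an agent. Everything in it is an assumption for illustration: the names `InspectionResult` and `InspectionAgent`, the `defect_score` field, and the 0.5 threshold are hypothetical, and a real system would derive the score from camera images rather than receive it ready-made.

```python
from dataclasses import dataclass, field


@dataclass
class InspectionResult:
    """What the sensor (the camera) reports for one product. Hypothetical fields."""
    product_id: int
    defect_score: float  # assumed scale: 0.0 = flawless, 1.0 = clearly faulty


@dataclass
class InspectionAgent:
    """Decides whether a product on the belt is shipped or discarded."""
    defect_threshold: float = 0.5  # assumed tuning value, not from the article
    removed: list = field(default_factory=list)  # stands in for the actuator (the arm)

    def act(self, result: InspectionResult) -> str:
        # Perceive the environment through the sensor reading, then pick
        # the action that is expected to give the best outcome.
        if result.defect_score >= self.defect_threshold:
            self.removed.append(result.product_id)  # the "arm" removes the piece
            return "discard"
        return "ship"


# Three products travel along the conveyor belt (the environment).
agent = InspectionAgent()
belt = [
    InspectionResult(1, 0.1),
    InspectionResult(2, 0.9),
    InspectionResult(3, 0.4),
]
decisions = [agent.act(item) for item in belt]
print(decisions)      # ['ship', 'discard', 'ship']
print(agent.removed)  # [2]
```

The performance measure is not part of the class itself. You would evaluate it from the outside, for example by counting how many faulty products the agent missed and how long each decision took.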

How Does AI Fit Into Edge Computing?

Such agents can be almost anything. An intelligent agent can, for example, tell good sensor readings from bad ones. And this is one example where you can link edge computing to AI.

If you want to store the results from hundreds or even thousands of different sensors in a data center somewhere in the cloud, it would be fantastic if you could upload only the plausible results. If you place one or more edge nodes between your sensors and the cloud, you can train a neural network, for example, to discard unwanted results before they get uploaded, just like the intelligent agent in the example above would do with faulty products.
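The filtering step on an edge node could look roughly like the sketch below. Note that it deliberately swaps the neural network for a simple range check to keep the example short; a trained model would take the place of `is_plausible`. The function names and the temperature limits of -40 to 85 are assumptions made up for this illustration.

```python
import math


def is_plausible(reading: float, low: float = -40.0, high: float = 85.0) -> bool:
    """Stand-in for a trained model: accept only readings inside an assumed range."""
    # NaN fails every comparison, so broken readings are rejected as well.
    return low <= reading <= high


def filter_readings(readings: list[float]) -> list[float]:
    """Runs on the edge node and keeps only the readings worth uploading."""
    return [r for r in readings if is_plausible(r)]


# Raw temperature readings, including two obvious glitches and one NaN.
raw = [21.5, 22.0, -999.0, 23.1, 400.0, math.nan]
to_upload = filter_readings(raw)
print(to_upload)  # [21.5, 22.0, 23.1]
```

Only three of the six readings would ever leave the local network in this example.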

The data can also be buffered on the edge node and uploaded in batches. This further reduces traffic on the network and decreases the load on the cloud computer, which lowers the cost if you have to pay per processed megabyte.
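Buffering and batch uploads can be sketched in a few lines of Python. The `BatchUploader` class and its `upload` callback are hypothetical names chosen for this example; in a real project the callback would send the batch to your cloud service, while here it simply appends to a list so you can see what would be transmitted.

```python
from typing import Callable


class BatchUploader:
    """Collects readings on the edge node and uploads them in batches."""

    def __init__(self, upload: Callable[[list], None], batch_size: int = 3):
        self.upload = upload          # assumed callback that sends one batch
        self.batch_size = batch_size  # assumed value; tune it for your network
        self.buffer: list = []

    def add(self, reading: float) -> None:
        self.buffer.append(reading)
        if len(self.buffer) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        """Sends whatever is buffered, e.g. on shutdown or on a timer."""
        if self.buffer:
            self.upload(list(self.buffer))  # pass a copy of the current batch
            self.buffer.clear()


sent = []  # stands in for the cloud: one entry per upload request
uploader = BatchUploader(upload=sent.append, batch_size=3)
for reading in [21.5, 22.0, 23.1, 22.8]:
    uploader.add(reading)
uploader.flush()  # push the leftover reading

print(sent)  # [[21.5, 22.0, 23.1], [22.8]]
```

Four readings result in two upload requests instead of four. A larger batch size cuts the number of requests further, at the price of data arriving in the cloud later.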

Why Should I Use Edge Computing?

There are various reasons to consider adding one or more edge nodes to your computer network, depending on your specific use case.

As described above, you can reduce the amount of uploaded data and the resulting overhead. Furthermore, answers from a remote data center in the cloud aren’t instant, so an edge node close to the user can respond faster. Other important factors are security, scalability, and reliability.

A Quick Review of Edge Computing and AI

In conclusion, it’s not easy to clearly define either of these two terms, especially because they are often misused or used in various contexts.

However, edge computing happens somewhere between the cloud and the user, on the edge of the user’s network. One definition of AI is an entity that can perceive its environment and act accordingly to maximize the expected result.

You can gain some benefits when you combine the two, for example by filtering data from sensors before it gets uploaded to a remote data-center.